In the fast-moving field of artificial intelligence, the PyTorch vs TensorFlow debate has become a familiar one. Developers, researchers, and business leaders repeatedly compare the two frameworks to work out which better suits their needs. Both are giants of deep learning with rich features and broad capabilities, yet 7 surprising wins set them apart in distinctive and sometimes unexpected ways.

This article goes beyond surface-level comparisons to explore where each framework shines, sometimes unexpectedly. By the end, readers should understand how these wins play out in real-world applications and be better equipped to choose between the two.

Overview of PyTorch vs TensorFlow

To appreciate the surprising wins that follow, it helps to first understand what PyTorch and TensorFlow fundamentally represent.

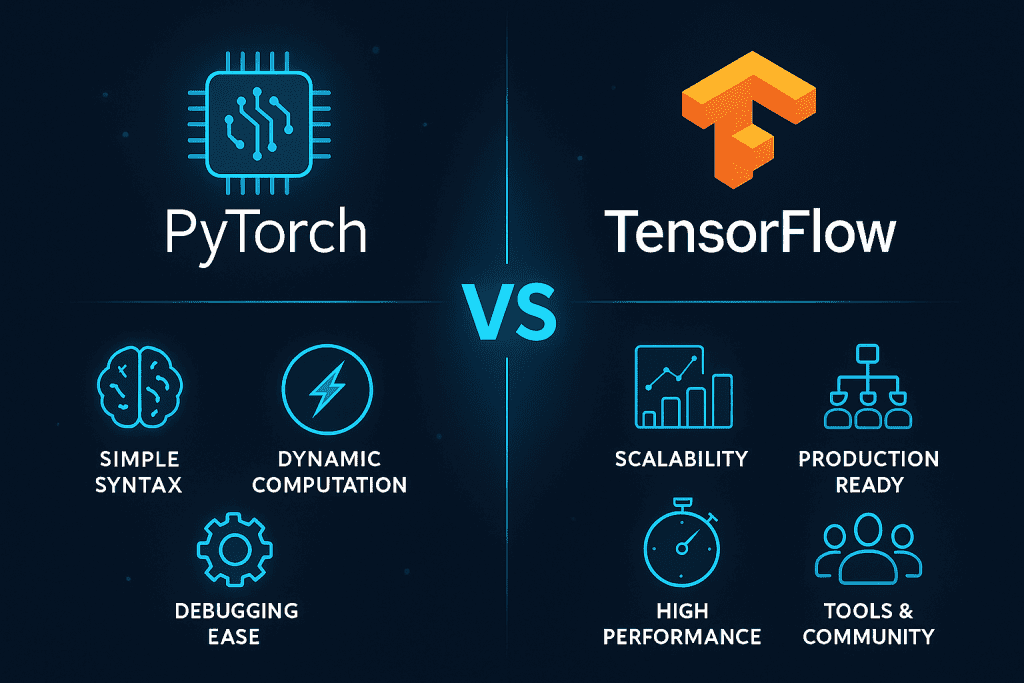

PyTorch, launched by Facebook’s AI Research lab (FAIR), is known for its dynamic computational graph: operations are defined and executed on the fly, and the graph is recorded as the code runs. This makes it highly flexible and especially well suited to research and rapid prototyping. PyTorch’s syntax closely mirrors native Python, making it approachable and intuitive for Python developers.
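
To make "define-by-run" concrete, here is a minimal pure-Python sketch of the idea, written without any framework so it runs anywhere. The `Value` class and the function `f` are hypothetical names for illustration, not PyTorch's API; in PyTorch, tensors created with `requires_grad=True` and a call to `.backward()` play the analogous roles. The graph is recorded as each operation executes, so an ordinary `if` statement decides which graph gets built for a given input.

```python
class Value:
    """Toy scalar that records each operation applied to it as it runs.

    Hypothetical illustration of define-by-run autodiff, not PyTorch's API.
    """

    def __init__(self, data, parents=()):
        self.data = data
        self.grad = 0.0
        self._parents = parents      # values this one was computed from
        self._backward_fn = None     # pushes self.grad back to the parents

    def __add__(self, other):
        out = Value(self.data + other.data, (self, other))

        def backward_fn():
            # d(a + b)/da = 1 and d(a + b)/db = 1
            self.grad += out.grad
            other.grad += out.grad

        out._backward_fn = backward_fn
        return out

    def __mul__(self, other):
        out = Value(self.data * other.data, (self, other))

        def backward_fn():
            # d(a * b)/da = b and d(a * b)/db = a
            self.grad += other.data * out.grad
            other.grad += self.data * out.grad

        out._backward_fn = backward_fn
        return out

    def backward(self):
        # Order the graph recorded during the forward pass so that each node
        # is processed after every node that depends on it.
        order, seen = [], set()

        def visit(node):
            if id(node) not in seen:
                seen.add(id(node))
                for parent in node._parents:
                    visit(parent)
                order.append(node)

        visit(self)
        self.grad = 1.0
        for node in reversed(order):
            if node._backward_fn is not None:
                node._backward_fn()


def f(x):
    # Plain Python control flow decides which graph is built for this input.
    if x.data > 0:
        return x * x   # records a multiply node: df/dx = 2x
    return x + x       # records an add node: df/dx = 2
```

Calling `f(Value(3.0)).backward()` records `x * x` and leaves a gradient of 6.0 on the input, while `f(Value(-2.0)).backward()` records `x + x` and leaves 2.0. Nothing about the graph had to be declared in advance.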

TensorFlow, on the other hand, was developed by Google with scalability and production readiness in mind. TensorFlow 1.x used a static computational graph: the entire computation had to be defined before it could be executed, a design that enabled graph-level optimizations and performance benefits in large-scale systems. TensorFlow 2.x made eager execution the default, gaining much of that flexibility while retaining its robust production ecosystem.
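
For contrast, the sketch below mimics the define-then-run style of TensorFlow 1.x, where a graph of symbolic nodes is built first and values are supplied only when the graph is executed (much like the old `Session.run` with a feed dictionary). `Node`, `placeholder`, and `run` are hypothetical names for illustration, not TensorFlow's API.

```python
class Node:
    """Symbolic node in a toy define-then-run graph (hypothetical, not TensorFlow's API)."""

    def __init__(self, op, inputs=(), name=None):
        self.op = op          # "placeholder", "add", or "mul"
        self.inputs = inputs  # upstream nodes
        self.name = name      # only used by placeholders

    def __add__(self, other):
        return Node("add", (self, other))   # builds a node; computes nothing

    def __mul__(self, other):
        return Node("mul", (self, other))


def placeholder(name):
    # Declares an input slot; its value is supplied only at run time.
    return Node("placeholder", name=name)


def run(node, feed, cache=None):
    """Evaluate a previously defined graph, given values for its placeholders."""
    cache = {} if cache is None else cache
    if id(node) in cache:                # reuse shared subexpressions
        return cache[id(node)]
    if node.op == "placeholder":
        value = feed[node.name]
    else:
        a, b = (run(i, feed, cache) for i in node.inputs)
        value = a + b if node.op == "add" else a * b
    cache[id(node)] = value
    return value
```

Here `z = x * y + x` (with `x = placeholder("x")` and `y = placeholder("y")`) only builds the graph; `run(z, {"x": 2, "y": 5})` then returns 12. Because the whole graph exists before any data arrives, a framework can analyze and optimize it ahead of execution; TensorFlow 2.x keeps this option through `tf.function` while running eagerly by default.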

For a visual walkthrough of their foundational differences, see the YouTube video listed under Resources.

In-Depth Analysis: 7 Surprising Wins That Matter

This section covers seven areas where PyTorch or TensorFlow takes a lead that many developers may not anticipate, with consequences for research, production, deployment, and innovation.

1. Debugging Simplicity with PyTorch

One of PyTorch’s most celebrated, yet sometimes underrated, strengths is how easy it is to debug. Because the computational graph is built as the code runs, developers can inspect outputs and variables directly at runtime using familiar tools such as Python’s pdb debugger or simple print statements. This makes PyTorch beginner-friendly and efficient for trial-and-error experimentation.

Key Benefits:

- Real-time debugging

- Compatibility with native Python tools

- Smooth variable inspection

2. TensorFlow’s Production Superiority

While PyTorch shines in the lab, TensorFlow has long held the edge in production. Its range of deployment tools (TensorFlow Serving, TensorFlow Lite, and TensorFlow.js) makes it well suited to moving models from development to live systems.

| Deployment Stage | TensorFlow Tools Available |
|---|---|
| Server-side | TensorFlow Serving |
| Mobile | TensorFlow Lite |
| Web/Browser | TensorFlow.js |
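
As a concrete example of the server-side path, TensorFlow Serving exposes a REST API (on port 8501 by default) where a POST to `/v1/models/<model_name>:predict` with a JSON body such as `{"instances": [...]}` returns predictions. The sketch below only builds such a request with Python's standard library; the host, the model name `my_model`, and the input values are placeholders, and it assumes a model has already been exported and loaded by the server.

```python
import json
import urllib.request


def build_predict_request(host, model_name, instances, port=8501, version=None):
    """Build (but do not send) a TensorFlow Serving REST :predict request.

    host, model_name, and instances are caller-supplied placeholders.
    """
    path = f"/v1/models/{model_name}"
    if version is not None:
        path += f"/versions/{version}"   # optionally pin a specific model version
    url = f"http://{host}:{port}{path}:predict"
    body = json.dumps({"instances": instances}).encode("utf-8")
    return urllib.request.Request(
        url, data=body, headers={"Content-Type": "application/json"}, method="POST"
    )
```

Passing the returned request to `urllib.request.urlopen` against a running server would return a JSON object with a `"predictions"` field.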

3. PyTorch’s Research Community Power

A surprising win for PyTorch is its dominance in cutting-edge research. Many top-tier academic papers and experimental models debut in PyTorch thanks to its flexibility and ease of use.

Leading Research Applications:

- Reinforcement Learning (RL)

- Natural Language Processing (NLP)

- Computer Vision

- Generative Adversarial Networks (GANs)

4. TensorFlow’s Cross-Platform Versatility

TensorFlow’s ability to run on many platforms, from cloud servers to mobile devices to browsers, makes it a powerhouse for diverse deployment scenarios. Few frameworks match its reach.

Cross-Platform Reach:

| Platform | TensorFlow Solution |
|---|---|
| Cloud Servers | TensorFlow Core, Serving |
| Mobile Devices | TensorFlow Lite |
| Web Browsers | TensorFlow.js |

5. PyTorch’s Pythonic Elegance

Developers coming from Python or NumPy often find PyTorch’s design refreshingly natural. Its Python-first approach reduces friction, speeds up learning, and integrates smoothly with other Python libraries.

Why Developers Love It:

- Familiar Python syntax

- Native use of loops and conditionals

- Direct NumPy interoperability

- Lower barrier for experimentation
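
In PyTorch, "native loops and conditionals" means a model's forward pass can use ordinary Python control flow whose shape depends on the data. The framework-free sketch below illustrates the pattern with a toy recurrent encoder; `rnn_encode` and its scalar weights are made up for illustration, and a PyTorch module could loop over time steps the same way inside its `forward()` method.

```python
import math


def rnn_encode(sequence, w_in=0.5, w_rec=0.8):
    """Toy recurrent encoder: a plain for-loop over an input of any length.

    The scalar weights are made-up values chosen for illustration only.
    """
    state = 0.0
    for x in sequence:            # loop length depends on the input
        if x is None:             # data-dependent branch: skip padding entries
            continue
        state = math.tanh(w_in * x + w_rec * state)
    return state
```

Sequences of different lengths need no special handling: `rnn_encode([1.0])` runs one step, `rnn_encode([1.0, 2.0])` runs two, and padding entries are simply skipped by the `if` branch.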

6. TensorFlow’s AutoML Edge

TensorFlow surprises many with its commitment to democratizing AI through automated machine learning (AutoML). Tools in its ecosystem, such as Keras Tuner for hyperparameter search and Google Cloud’s AutoML services, lower the barrier for non-experts to build, optimize, and deploy complex models.

AutoML Features in the TensorFlow Ecosystem:

| Feature | Benefit |
|---|---|
| Automated Tuning | Simplifies hyperparameter optimization |
| Model Export Tools | Smooth integration into production |
| Prebuilt Pipelines | Reduces setup time for common tasks |
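
Keras Tuner automates hyperparameter search with strategies such as random search, Hyperband, and Bayesian optimization. To show what that automation does under the hood, the sketch below implements plain random search in standard-library Python; it is a generic illustration rather than Keras Tuner's API, and `fake_validation_loss` is a made-up stand-in for training a model and scoring it.

```python
import random


def random_search(objective, space, trials=20, seed=0):
    """Plain random search (generic sketch, not Keras Tuner's API).

    `space` maps each hyperparameter name to a list of candidate values.
    """
    rng = random.Random(seed)   # seeded so results are reproducible
    best_params, best_score = None, float("inf")
    for _ in range(trials):
        params = {name: rng.choice(options) for name, options in space.items()}
        score = objective(params)          # lower is better, like a loss
        if score < best_score:
            best_params, best_score = params, score
    return best_params, best_score


def fake_validation_loss(params):
    # Made-up objective: pretends the best model uses lr=0.01 and 64 units.
    return (params["learning_rate"] - 0.01) ** 2 + abs(params["units"] - 64) / 1000
```

With `space = {"learning_rate": [0.1, 0.01, 0.001], "units": [32, 64, 128]}`, the search samples configurations, keeps the one with the lowest score, and returns it together with that score. Tools such as Keras Tuner layer smarter sampling strategies on top of this basic loop.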

7. PyTorch’s Growing Deployment Tools

PyTorch has made impressive strides in closing its production gap with tools like TorchScript and ONNX (Open Neural Network Exchange). These tools allow models trained in PyTorch to be optimized and deployed across a range of platforms.

Deployment Advances:

- Expanding support for edge devices

- TorchScript for serializing models

- ONNX for cross-framework compatibility

- C++ runtime support for production apps
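
ONNX represents a model as a graph of standardized operators (such as `Add`, `Mul`, and `Relu`) that any compatible runtime can execute without the code that originally defined the model. The toy sketch below mimics that idea with JSON; it is not the real ONNX format, which is protobuf-based, and `MODEL`, `export_model`, and `run_exported` are hypothetical names. In actual PyTorch code, `torch.onnx.export` produces a real ONNX file, and `torch.jit.script` or `torch.jit.trace` followed by `.save()` produces a TorchScript archive.

```python
import json

# Toy, ONNX-inspired model description (NOT the real ONNX format): a list of
# nodes, each naming a standard operator, its input names, and its output name.
MODEL = {
    "inputs": ["x"],
    "initializers": {"w": 2.0, "b": -1.0},   # trained parameters
    "nodes": [
        {"op": "Mul", "inputs": ["x", "w"], "output": "xw"},
        {"op": "Add", "inputs": ["xw", "b"], "output": "z"},
        {"op": "Relu", "inputs": ["z"], "output": "y"},
    ],
    "outputs": ["y"],
}

# The runtime only needs an implementation of each standard operator.
OPS = {
    "Mul": lambda a, b: a * b,
    "Add": lambda a, b: a + b,
    "Relu": lambda a: max(0.0, a),
}


def export_model(model):
    # "Training side": serialize the graph to a framework-neutral text form.
    return json.dumps(model)


def run_exported(serialized, feeds):
    # "Deployment side": executes the graph from the serialized form alone.
    model = json.loads(serialized)
    env = dict(model["initializers"])
    env.update(feeds)
    for node in model["nodes"]:
        args = [env[name] for name in node["inputs"]]
        env[node["output"]] = OPS[node["op"]](*args)
    return [env[name] for name in model["outputs"]]
```

The design point is the separation: once exported, the model can be run by any runtime that implements the operator set, which is what lets ONNX move PyTorch-trained models into other serving environments.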

PyTorch vs TensorFlow Comparison

When comparing PyTorch and TensorFlow, it’s essential to ground the analysis in real-world use cases rather than theoretical assumptions. Both frameworks compete in the deep learning space alongside alternatives such as JAX (Google’s research-focused library), Apache MXNet (formerly backed by Amazon), and Keras (bundled with TensorFlow as tf.keras; Keras 3 can also run on JAX and PyTorch). PyTorch and TensorFlow remain the most widely adopted, and they differ measurably in flexibility, deployment tooling, and ecosystem maturity.

| Feature | PyTorch | TensorFlow |
|---|---|---|
| Computational Graph | Dynamic (define-by-run); allows on-the-fly changes and easy debugging | Static (define-before-run) in 1.x; eager by default in 2.x, with tf.function compiling code into optimized graphs |
| Use Case Strength | Best for research, innovation, prototyping | Best for production, deployment, cross-platform apps |
| Deployment Tools | TorchScript, ONNX; expanding but newer | TensorFlow Serving, Lite, JS; highly mature and enterprise-ready |
| Community & Adoption | Strong in academia and open-source research | Strong in enterprise, with a vast ecosystem and corporate backing |
| Flexibility & Control | High flexibility; developer-driven experiments | High scalability; enterprise-driven optimization |
| Integration & Support | Native Python, NumPy; tight researcher integration | Google Cloud integration, Keras, TFX pipelines |

These distinctions help explain why Meta (formerly Facebook), where PyTorch originated, leans on it for innovation, while Google and enterprises such as Airbnb and Twitter have favored TensorFlow for scalable production.

PyTorch vs TensorFlow Pros and Cons

Before choosing between these frameworks, it’s important to weigh the pros and cons based on actual user experience, project needs, and long-term goals.

| PyTorch: Pros | PyTorch: Cons | TensorFlow: Pros | TensorFlow: Cons |
|---|---|---|---|
| Pythonic and intuitive; easy for Python developers to pick up | Deployment tools are newer and less mature | Mature APIs and Keras integration; improved usability in 2.x | Historically complex API; requires more initial setup |
| Ideal for rapid experiments and dynamic model testing | Smaller enterprise adoption footprint | Strong research-to-production pathways; solid AutoML tools | Less flexible for exploratory or nonstandard workflows |
| TorchScript and ONNX improving deployment capabilities | Historically weaker production and enterprise tooling | Leading deployment tools (Serving, Lite, JS); enterprise-ready | Larger framework; can be heavy for smaller applications |
| Vibrant academic and research community; cutting-edge projects | Limited enterprise support compared to TensorFlow | Massive global community; extensive corporate and enterprise backing | Sometimes slower to adopt experimental innovations |

Conclusion

The PyTorch vs TensorFlow debate reveals not a clear winner but two complementary giants, each excelling in different areas. These 7 surprising wins show that the right choice depends heavily on a project’s needs.

PyTorch shines in research innovation and developer-friendliness, while TensorFlow leads in production scalability and cross-platform integration. Many developers and businesses end up using both, applying each where it is strongest.

PyTorch vs TensorFlow Rating

When comparing PyTorch and TensorFlow, it helps to understand the context behind the ratings rather than reading them in isolation. Each score reflects how the framework performs across critical dimensions such as usability, performance, ecosystem, scalability, and support.

- PyTorch Rating: ★★★★☆ (4.5/5) for research flexibility and Pythonic design

- TensorFlow Rating: ★★★★☆ (4.6/5) for production tools and deployment versatility

For additional community ratings and insights, see the Twitter debate listed under Resources.

FAQ

Why is PyTorch preferred for research projects?

Because its dynamic graph and Pythonic interface allow for rapid experimentation, easy debugging, and straightforward integration with other Python libraries, making it ideal for academic environments.

What gives TensorFlow a production advantage over PyTorch?

TensorFlow’s integrated production tools, including TensorFlow Serving, TensorFlow Lite, and TensorFlow.js, make it easier to deploy models at scale, reaching web, mobile, and embedded devices efficiently.

Which framework is better suited to AutoML?

TensorFlow has the edge here: tools in its ecosystem, such as Keras Tuner, lower the barrier for non-experts to develop sophisticated models, making advanced techniques accessible to a broader audience.

Resources

- YouTube. PyTorch vs TensorFlow Video

- Viso.ai. PyTorch vs TensorFlow Comparison

- BuiltIn. In-depth Guide on PyTorch vs TensorFlow

- Twitter. Debate on PyTorch vs TensorFlow

- OpenCV Blog. TensorFlow and PyTorch Differences