Technology never stands still. Each year, new tech trends emerge that disrupt the status quo. One of the most transformative is Edge Computing.

Edge Computing moves data processing closer to where data originates. Instead of relying fully on centralized cloud servers, it allows devices or local servers to handle computation. This reduces delays (latency), saves bandwidth, and enhances privacy.

I first encountered edge computing while working on a smart agriculture project. Sensors in remote fields produced torrents of data (soil moisture, weather changes, drone footage), but internet connectivity was spotty. With edge computing, much of the data was processed directly on-site. The result was faster alerts and more reliable operations, even when cloud access dropped.

In the world of futuristic technology, understanding Edge Computing matters more than ever. It underpins everything from real-time AI to autonomous vehicles.

What is Edge Computing?

Edge Computing is a distributed computing paradigm that brings enterprise applications, data processing, and analytics closer to data sources—such as IoT devices, user devices, or local edge servers—rather than depending solely on distant cloud data centers.

Related terms include fog computing, edge intelligence, and distributed computing at the edge. All of them refer to moving computation and storage nearer to where data is produced.

It aims to deliver faster responses and insights, reduce bandwidth costs, and give organizations more control over their data.

Breaking Down Edge Computing

To understand edge computing, it helps to look at its components, how it differs from cloud computing, and some real-world examples.

First, what problem does Edge Computing solve? As IoT devices (sensors, cameras, wearables) multiply, they generate huge volumes of data. If every data point had to travel to the cloud, network congestion, delays, and costs would rise sharply. Edge Computing alleviates these issues by processing data locally.
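To put rough numbers on the bandwidth problem, here is a minimal back-of-the-envelope sketch. The camera count, the 4 Mbit/s bitrate, and the 1% forwarding ratio are hypothetical assumptions chosen for illustration, not measurements from a real deployment.

```python
# Back-of-the-envelope daily data volumes.
# All inputs below are hypothetical figures chosen for illustration.
SECONDS_PER_DAY = 24 * 60 * 60

def daily_volume_gb(bitrate_mbps: float, devices: int = 1) -> float:
    """Data produced per day in gigabytes (1 GB = 10**9 bytes)."""
    bits_per_day = bitrate_mbps * 1e6 * SECONDS_PER_DAY * devices
    return bits_per_day / 8 / 1e9

# Hypothetical fleet: 100 cameras, each streaming at 4 Mbit/s.
raw = daily_volume_gb(4.0, devices=100)
# Hypothetical edge filtering: only event clips (about 1% of footage) go upstream.
forwarded = raw * 0.01

print(f"raw: {raw:,.0f} GB/day, forwarded: {forwarded:,.1f} GB/day")
# prints: raw: 4,320 GB/day, forwarded: 43.2 GB/day
```

Under these assumptions, local filtering cuts upstream traffic by two orders of magnitude; the real ratio depends entirely on how much of the data is actually worth sending.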

Key Components

- Edge Devices: The sources of data, such as sensors, wearables, smart cameras, and industrial machines. They often do some preprocessing themselves.

- Edge Nodes / Gateways / Local Servers: Devices or servers close to the edge that process, filter, or aggregate data. They might host analytics logic, lightweight AI models, or decision-making routines.

- Edge Infrastructure / Edge Cloud: Sometimes there are tiers: local nodes, micro data centers, and edge clouds. These supplement or offload work from central cloud servers, and the system must balance what is processed locally against what goes to the cloud.

- Connectivity and Networking: Technologies like 5G, local networks, or specialized links enable fast, reliable communication. Edge systems must often cope with intermittent connectivity or variable bandwidth.

How It Differs from Cloud Computing

- Latency: Cloud computing often introduces delays because data must travel long distances. Edge Computing greatly reduces that delay by processing data on or near the source.

- Bandwidth Use: Edge reduces the amount of data needing transfer to central servers. Only essential data is sent.

- Reliability: With Edge, systems can operate even with poor or no internet connection. Local decisions can be made.

- Security & Privacy: Keeping data local reduces exposure during transmission and gives more control over where sensitive data is stored.
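Distance alone puts a floor under cloud latency. This minimal sketch assumes signals in optical fiber travel at roughly 200,000 km/s (about two-thirds of the speed of light in a vacuum) and ignores routing detours, queuing, and processing time, so real round trips are longer. The distances are hypothetical.

```python
# Propagation-only lower bound on round-trip time.
# Assumption: light in optical fiber covers roughly 200 km per millisecond.
FIBER_KM_PER_MS = 200.0

def min_round_trip_ms(distance_km: float) -> float:
    """Round-trip propagation delay for a given one-way distance."""
    return 2 * distance_km / FIBER_KM_PER_MS

# Hypothetical distances from a data source to where it gets processed.
for label, km in [("on-site edge node", 0.5),
                  ("regional edge site", 50.0),
                  ("distant cloud region", 2000.0)]:
    print(f"{label:>20}: at least {min_round_trip_ms(km):.3f} ms")
```

Even the best case of about 20 ms for a 2,000 km path can matter for control loops that must react within a few milliseconds, which is where on-site processing helps.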

Examples

- In industrial settings, machinery sensors detect anomalies locally and send alerts without waiting for cloud processing. That can prevent breakdowns.

- Smart traffic systems use Edge Computing so that traffic lights adjust in real time based on local sensor data rather than relying on centralized control.

- Retail stores deploy real-time video analytics on edge servers for security and customer behavior insights.
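As a sketch of the first example, local anomaly detection on a machine sensor, the code below uses a rolling z-score: a reading is flagged when it sits more than a few standard deviations from the recent mean. The technique, the class name `RollingAnomalyDetector`, the window/threshold defaults, and the vibration values are all hypothetical choices for illustration; production systems may use very different models.

```python
from collections import deque
from statistics import mean, pstdev

class RollingAnomalyDetector:
    """Flags readings far outside a rolling baseline (a simple z-score rule).

    The window size, warm-up length, and threshold are hypothetical defaults.
    """

    def __init__(self, window: int = 50, warmup: int = 10, threshold: float = 3.0) -> None:
        self.readings = deque(maxlen=window)  # most recent readings only
        self.warmup = warmup
        self.threshold = threshold

    def update(self, value: float) -> bool:
        """Return True if `value` deviates more than `threshold` std devs from the window mean."""
        anomalous = False
        if len(self.readings) >= self.warmup:
            mu = mean(self.readings)
            sigma = pstdev(self.readings)
            # With sigma == 0 (a perfectly flat signal), any change counts as anomalous.
            anomalous = abs(value - mu) > self.threshold * sigma
        self.readings.append(value)  # every reading, anomalous or not, joins the baseline
        return anomalous

detector = RollingAnomalyDetector()
vibration = [1.0, 1.1, 0.9, 1.0, 1.05, 0.95, 1.0, 1.1, 0.9, 1.0, 1.02, 5.0]
alerts = [i for i, v in enumerate(vibration) if detector.update(v)]
print(alerts)  # [11]
```

Because the check needs only the last few dozen readings, it fits comfortably on a gateway or even the sensor's own microcontroller, and an alert can fire without any cloud round trip.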

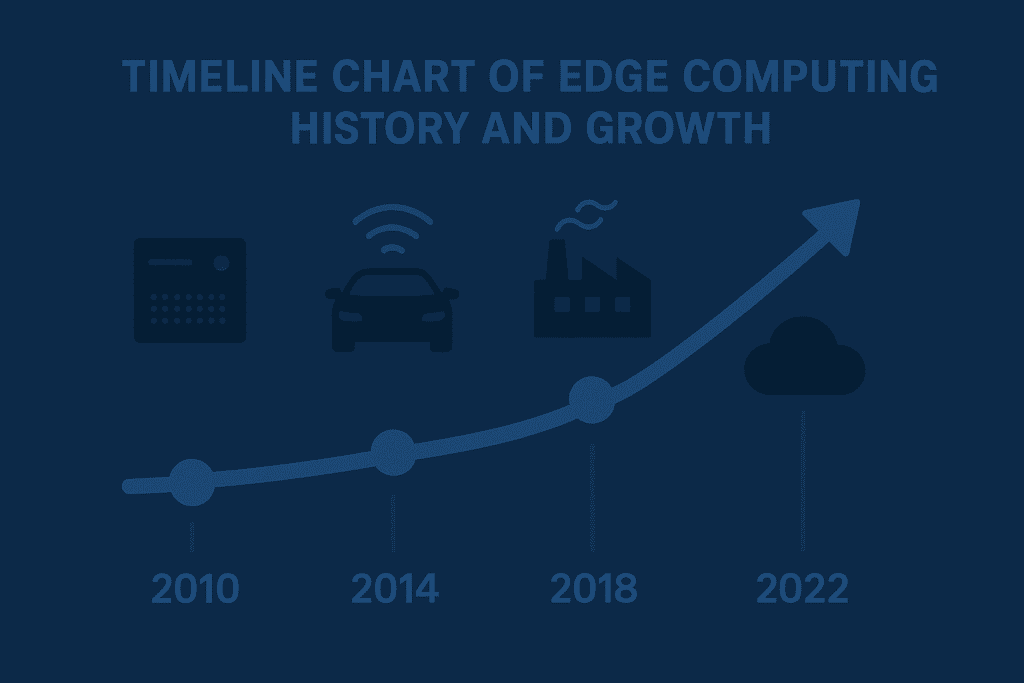

History of Edge Computing

Here’s how Edge Computing evolved over time, from early computing models to today’s advanced applications.

| Period | Development |
|---|---|
| Pre-1990s | Computing was largely on-premises: mainframes, monolithic servers, limited network capacity. |
| 1990s | Emergence of content delivery networks (CDNs) distributing content closer to users. |
| 2000s | Growth of mobile computing and the Internet of Things (IoT); cloud computing becomes popular. |
| 2010s | The term “fog computing” appears; early edge frameworks emerge; edge devices become powerful enough for local processing. |
| Mid/late 2010s | Standardization efforts such as the OpenFog Consortium; stronger hardware and improved protocols; edge combined with 5G networks. |
| 2020s-present | Widespread adoption in healthcare, autonomous systems, and smart cities; edge AI emerges; deployments scale up. |

Historically, the concept of processing close to data sources isn’t new. What’s new is the ability to do it at large scale with modern hardware, networking, and tooling.

Types of Edge Computing

Device Edge

This is the most direct form. Processing happens on the actual device that generates the data—like smartphones, security cameras, or IoT sensors. Because the work stays local, responses are nearly instant.

For example, a smartwatch can analyze heart rate data in real time and send an alert if it detects irregularities. It doesn’t wait for a cloud server. This makes Device Edge crucial for healthcare, wearables, and any system where immediate feedback matters.

Network Edge

Here, data moves from the device to a nearby gateway or router before processing. These nodes handle information from many devices at once, filter out what’s unnecessary, and then forward only important data to the cloud.

Telecom providers use Network Edge heavily. With the rollout of 5G, edge nodes at or near base stations help mobile apps, gaming, and streaming run faster and more smoothly.
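The gateway filtering described above can be sketched with a deadband filter: the gateway forwards a device's reading only when it has changed meaningfully since the last value it sent upstream. The `Reading` and `DeadbandGateway` names, the 0.5 deadband, and the sample temperatures are hypothetical; deadband filtering is just one common way a gateway can decide what is "important".

```python
from __future__ import annotations
from dataclasses import dataclass

@dataclass
class Reading:
    device_id: str
    value: float

class DeadbandGateway:
    """Forwards a device's reading only if it moved more than `deadband`
    since the last value forwarded for that same device."""

    def __init__(self, deadband: float) -> None:
        self.deadband = deadband
        self.last_sent: dict[str, float] = {}

    def process(self, batch: list[Reading]) -> list[Reading]:
        forwarded = []
        for reading in batch:
            previous = self.last_sent.get(reading.device_id)
            if previous is None or abs(reading.value - previous) > self.deadband:
                self.last_sent[reading.device_id] = reading.value
                forwarded.append(reading)  # significant change: send upstream
            # otherwise the reading is dropped at the gateway
        return forwarded

gateway = DeadbandGateway(deadband=0.5)
batch = [Reading("t1", 20.0), Reading("t1", 20.2), Reading("t2", 18.0), Reading("t1", 21.0)]
sent = gateway.process(batch)
print([(r.device_id, r.value) for r in sent])
# prints: [('t1', 20.0), ('t2', 18.0), ('t1', 21.0)]
```

Tracking state per device is what lets one gateway serve many sensors at once: each device's stream is compared only against its own history.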

Edge Cloud / Micro Data Centers

This type sits between local devices and the public cloud. Small-scale, regional servers or micro data centers handle workloads that devices or gateways can’t manage. They balance speed with computing power.

Think of autonomous cars sending data to a nearby edge data center that coordinates traffic across a city. That coordination is too heavy for any single car but too time-sensitive to route through a distant cloud.

Hybrid Edge Models

Some systems combine multiple edge approaches. A drone may process navigation data on-device (Device Edge), transmit live video through a gateway (Network Edge), and rely on a nearby edge data center for heavier AI analysis (Edge Cloud).

Hybrid models are increasingly common as businesses blend flexibility with performance.
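One way a hybrid system might decide where each task runs is a simple "nearest tier that meets the deadline" rule: estimate network delay plus compute time at each tier, and pick the closest one that finishes in time. This is a minimal sketch of that one heuristic; the tier names, round-trip times, and capacities are hypothetical, and real orchestrators also weigh cost, current load, energy, and data locality.

```python
from __future__ import annotations
from dataclasses import dataclass

@dataclass(frozen=True)
class Tier:
    name: str
    round_trip_ms: float    # network round trip to reach the tier
    capacity_gflops: float  # compute the tier can devote to this task

# Hypothetical tiers, ordered nearest first; the numbers are illustrative only.
TIERS = [
    Tier("device", 0.0, 5.0),
    Tier("network edge", 5.0, 100.0),
    Tier("edge cloud", 15.0, 1_000.0),
    Tier("central cloud", 80.0, 100_000.0),
]

def place(task_gflop: float, deadline_ms: float) -> str | None:
    """Return the nearest tier whose network delay plus compute time meets the deadline."""
    for tier in TIERS:
        compute_ms = task_gflop / tier.capacity_gflops * 1000.0
        if tier.round_trip_ms + compute_ms <= deadline_ms:
            return tier.name
    return None  # no tier can meet the deadline

print(place(0.01, 10.0))     # device
print(place(1.0, 30.0))      # network edge
print(place(50.0, 100.0))    # edge cloud
print(place(5000.0, 200.0))  # central cloud
```

Light tasks stay on the device, while heavier ones climb the hierarchy only as far as they must, mirroring the drone example above.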

| Type | Location | Example Use Case |
|---|---|---|
| Device Edge | On-device (sensor, smartphone, IoT) | Smartwatches analyzing health metrics |
| Network Edge | Local gateways, routers, telecom base stations | Gaming, telecom, traffic management |
| Edge Cloud / Micro DC | Nearby regional servers | Smart cities, autonomous transportation |
| Hybrid Edge Models | Mix of device, network, and micro DC | Drones, robotics, advanced industrial IoT |

How does it work?

Edge Computing works by assigning each computation task to the nearest location that can handle it, as close as possible to where the data is generated.

- Data is generated by devices or sensors.

- Local filtering or preprocessing occurs on the device or in an edge node.

- Critical operations or decisions are made locally.

- Non-urgent or bulk data may be sent to the cloud for long-term storage or deeper analysis.

This model ensures faster responses. For instance, in a healthcare monitoring system, the device detects irregular heart patterns and triggers local alarms instead of sending everything to a distant cloud first.
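The four steps above can be sketched as a single processing loop on a heart-rate monitor. The alarm range, the plausibility filter, and the batch size are hypothetical values for illustration (not clinical guidance), and the alarm and upload are stand-ins that simply record what would be sent.

```python
from __future__ import annotations

# Hypothetical alarm range; illustrative only, not clinical guidance.
LOW_BPM, HIGH_BPM = 40, 150
BATCH_SIZE = 5  # readings per cloud upload

def run_edge_loop(samples: list[int | None]) -> tuple[list[int], list[list[int]]]:
    """Process heart-rate samples on the device; return (alarms, uploaded batches)."""
    alarms: list[int] = []
    uploads: list[list[int]] = []
    pending: list[int] = []
    for bpm in samples:  # step 1: data arrives from the sensor
        # Step 2: local filtering drops missing or physically implausible samples.
        if bpm is None or not 0 < bpm < 300:
            continue
        # Step 3: the critical decision is made locally, with no cloud round trip.
        if not LOW_BPM <= bpm <= HIGH_BPM:
            alarms.append(bpm)  # stand-in for sounding a local alarm
        # Step 4: routine data is batched for long-term storage in the cloud.
        pending.append(bpm)
        if len(pending) == BATCH_SIZE:
            uploads.append(pending)  # stand-in for a real upload call
            pending = []
    return alarms, uploads

alarms, uploads = run_edge_loop([72, 75, None, 74, 180, 73, 999, 71, 70, 35])
print(alarms)   # [180, 35]
print(uploads)  # [[72, 75, 74, 180, 73]]
```

The alarm for 180 bpm fires the moment the sample arrives, long before its batch is uploaded. This sketch discards the final partial batch when it returns; a real device would keep it for the next upload.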

Pros & Cons

Here are the benefits and drawbacks of Edge Computing:

| Pros | Cons |
|---|---|
| Lower latency, faster responses | More complex architecture to manage |
| Reduced bandwidth usage and network costs | Higher hardware and maintenance costs for many distributed nodes |
| Improved reliability when connectivity is spotty or intermittent | Security challenges at more endpoints |
| Greater data privacy and regulatory compliance | Potential inconsistencies; need for strong orchestration |
| Supports real-time AI and critical applications | Scalability and resource management are tougher |

Edge Computing shines where speed, real-time response, or privacy matter most. But it demands careful planning and investment.

Uses of Edge Computing

Edge Computing is not a theoretical idea—it powers many real systems across industries.

Here are several applications and how they use Edge Computing in practice:

Healthcare

Hospitals employ Edge Computing to monitor patient vitals in real time. Local devices alert staff as soon as something goes wrong, without waiting on a round trip to the cloud. Edge processing also helps telemedicine devices function reliably in low-bandwidth settings.

Autonomous & Smart Transportation

Self-driving cars, drones, and traffic systems rely on Edge Computing. Vehicles process sensor data locally to make split-second decisions. Traffic infrastructure adjusts signals based on current flow. These systems demand low latency and reliability.

Industrial / Manufacturing

Factories use sensors on equipment to detect faults, predict maintenance, and even shut down machinery automatically if unsafe conditions arise. Edge Computing helps minimize downtime and improves safety.

Retail & Smart Stores

Retailers implement Edge Computing for video analytics, in-store customer behavior tracking, smart shelves, and local checkout systems. These systems reduce lag, improve the customer experience, and can strengthen security.

Telecommunications & 5G

Telcos use Edge Computing to enable low-latency communication and to support high-bandwidth applications such as AR/VR, gaming, and live streaming. 5G infrastructure complements Edge Computing by offering faster connections and a more distributed network architecture.

Smart Cities & IoT Ecosystems

Cities use Edge Computing for traffic management, public safety (cameras with local processing), environmental monitoring, and power grid management. IoT networks in such settings generate massive amounts of data, and edge processing cuts transmission delays and bandwidth use.

Challenges and Future Directions

Edge Computing offers many benefits, but it also introduces challenges. Understanding these helps in making smart decisions.

Security & Privacy Issues

With more devices and more edge nodes, the attack surface increases. Ensuring secure firmware, data encryption, and consistent updates is vital.
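As one small piece of "secure firmware", an edge node can refuse to install an image whose SHA-256 digest does not match a known-good value. This is a minimal sketch: the function name and image bytes are hypothetical, and a digest check only helps if the known-good digest itself arrives over a trusted channel. Production secure-boot and update schemes typically rely on digital signatures rather than a bare hash.

```python
import hashlib
import hmac

def firmware_is_trusted(image: bytes, expected_sha256_hex: str) -> bool:
    """Check an image against a known-good SHA-256 digest, in constant time."""
    actual = hashlib.sha256(image).hexdigest()
    return hmac.compare_digest(actual, expected_sha256_hex.lower())

image = b"hypothetical firmware v1.2"
known_good = hashlib.sha256(image).hexdigest()  # in practice, from a trusted source
print(firmware_is_trusted(image, known_good))            # True
print(firmware_is_trusted(image + b"\x00", known_good))  # False
```

`hmac.compare_digest` is used instead of `==` so the comparison time does not leak how many leading characters matched.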

Scalability & Resource Management

Managing many distributed nodes, balancing loads, and ensuring consistent performance across unpredictable environments is complex.

Interoperability & Standards

Diverse hardware, protocols, and software make integration tricky. Efforts like the OpenFog Consortium have aimed to standardize edge and fog architectures.

Connectivity & Offline Capability

Edge systems must handle network disruptions gracefully. Local operation becomes crucial when the cloud is unavailable.
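A common pattern for surviving outages is store-and-forward: queue outgoing messages locally while the uplink is down, then deliver them in order once it returns. The sketch below is a minimal version under stated assumptions: `StoreAndForward` and `fake_send` are hypothetical names, `send` is any callable that returns True on success, and the buffer is bounded so a long outage evicts the oldest messages rather than exhausting device memory.

```python
from collections import deque
from typing import Callable

class StoreAndForward:
    """Buffers outgoing messages while the uplink is down and sends them in order later.

    `send` is any callable returning True on success (a hypothetical interface).
    The buffer is bounded: during a long outage the oldest messages are evicted.
    """

    def __init__(self, send: Callable[[str], bool], max_buffered: int = 1000) -> None:
        self.send = send
        self.buffer = deque(maxlen=max_buffered)

    def submit(self, message: str) -> None:
        self.buffer.append(message)  # evicts the oldest message if the buffer is full
        self.flush()

    def flush(self) -> None:
        while self.buffer:
            if not self.send(self.buffer[0]):
                return  # uplink still down; keep the remaining messages
            self.buffer.popleft()  # delivered, so drop it from the buffer

online = False
delivered = []

def fake_send(message: str) -> bool:
    """Simulated uplink that only succeeds while `online` is True."""
    if online:
        delivered.append(message)
    return online

link = StoreAndForward(fake_send, max_buffered=3)
for message in ["a", "b", "c", "d"]:
    link.submit(message)  # offline: "a" is evicted when "d" arrives
online = True
link.flush()
print(delivered)  # ['b', 'c', 'd']
```

Dropping the oldest data is only one policy; a system that cannot lose anything would spill to local storage instead.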

Looking ahead, innovation is likely to come in several forms:

- Edge AI / Edge Intelligence: Running machine learning models at the edge, enabling inference locally.

- Tighter cloud-edge integration: Hybrid architectures that dynamically decide where data should be processed.

- Better hardware and energy-efficient devices: Edge devices becoming more powerful while using less power.

- Regulation and data governance: Stricter data laws may favor Edge Computing to keep sensitive data local.
