The first time I heard someone ask “What is a GPU?”, I was sitting next to a friend who was showing me his new gaming computer. He clicked a button, and suddenly his screen transformed into a vivid, lifelike world with moving shadows, glowing lights, and explosions that looked straight out of a Hollywood film. I was blown away. That moment taught me that GPUs weren’t just pieces of hardware; they were portals into entirely new experiences.

Fast forward to today, and GPUs have moved far beyond gaming. They’ve become one of the hottest technology trends, driving progress in artificial intelligence, creative industries, and even scientific research. If the CPU is the steady brain of your computer, then the GPU is its imagination, constantly drawing, calculating, and shaping what you see and what machines can learn.

What is a GPU?

So, let’s break it down. A GPU, or Graphics Processing Unit, is a processor built specifically to handle graphics and other data-heavy calculations. Some people casually call it a graphics card or video card, but technically those terms refer to the whole board that houses the GPU chip along with its memory and cooling system.

Think of it this way: the CPU is the careful planner who checks off a to-do list one item at a time, while the GPU is the creative painter who brings a canvas to life with thousands of tiny brushes working at once. This ability to work in parallel makes GPUs especially powerful for tasks that can be split into many small, independent pieces, like coloring millions of pixels.

Breaking Down a GPU

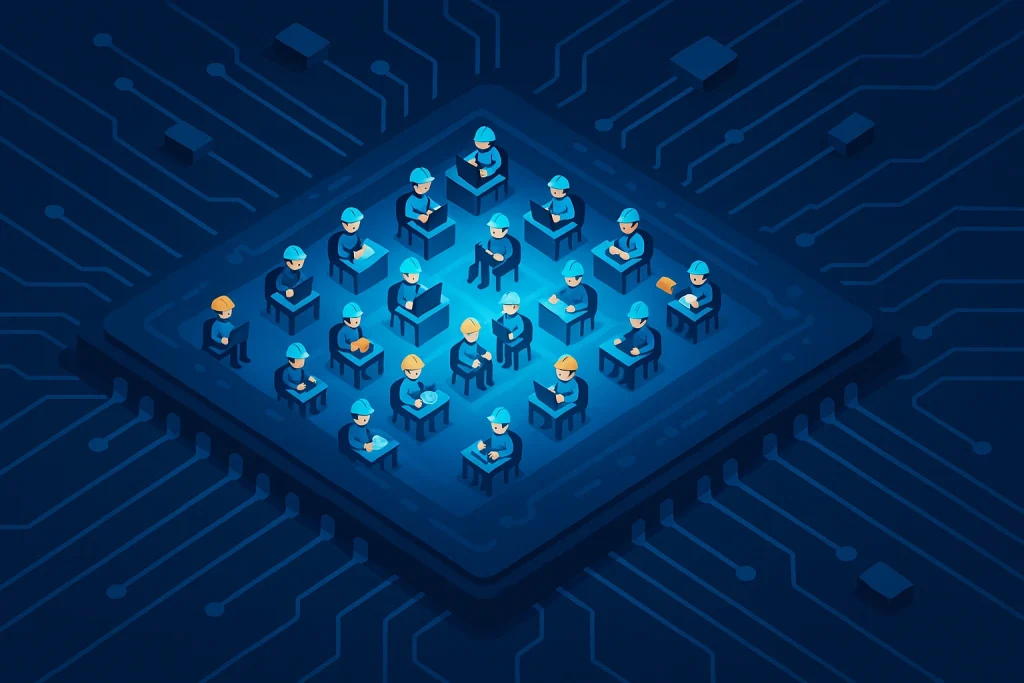

Now that we know what a GPU is, let’s peek inside. A Graphics Processing Unit (GPU) is made up of thousands of small processing units called cores. Each core is designed to handle a very small task quickly. Combine thousands of them, and massive jobs like rendering a realistic 3D city or training an AI model become manageable.

Here are a few of the most important components:

Cores

These are small processors inside the GPU that work on many tasks simultaneously. All else being equal, the more cores a GPU has, the more work it can do at once, which helps with heavy operations such as rendering frames, running AI models, or powering 3D applications.
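
To put rough numbers on “more cores, more work at once,” here’s a back-of-the-envelope sketch in Python. It assumes an idealized GPU where every task takes the same time and each core finishes one task per round; real hardware also waits on memory and scheduling, so treat the function `idealized_passes` and the core counts as hypothetical illustrations rather than real specs.

```python
import math


def idealized_passes(num_tasks: int, num_cores: int) -> int:
    """Rounds needed if each core handles exactly one task per round.

    Idealized model (an assumption for illustration): every task takes the
    same time, and nothing else (memory, scheduling) slows the cores down.
    """
    if num_cores <= 0:
        raise ValueError("num_cores must be positive")
    return math.ceil(num_tasks / num_cores)


# One 1920x1080 frame has about two million pixels to shade.
pixels = 1920 * 1080

for cores in (8, 2048, 8192):  # hypothetical core counts
    print(f"{cores:>5} cores -> {idealized_passes(pixels, cores):,} rounds")
```

In this idealized model, 8 cores need 259,200 rounds for a 1080p frame, while 2,048 cores need only 1,013.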

Shaders

Shaders are small programs that run on the GPU’s cores (which is why those cores are often called shader cores) to calculate lighting, shading, and texture effects in real time. By simulating how light interacts with surfaces, they bring digital environments to life and make games and visual applications look more realistic.
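
A shader boils down to a small function that runs once per pixel (or per vertex), and the GPU runs it for huge numbers of pixels at the same time. The Python sketch below shows one classic example, diffuse (Lambertian) lighting, for a single pixel. Real shaders are written in GPU languages such as GLSL or HLSL; the helper names here (`normalize`, `dot`, `lambert_shade`) are hypothetical and only mirror the math.

```python
import math

Vec3 = tuple[float, float, float]


def normalize(v: Vec3) -> Vec3:
    """Scale a vector to length 1 so only its direction matters."""
    length = math.sqrt(v[0] ** 2 + v[1] ** 2 + v[2] ** 2)
    return (v[0] / length, v[1] / length, v[2] / length)


def dot(a: Vec3, b: Vec3) -> float:
    """Dot product; for unit vectors this is the cosine of the angle between them."""
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def lambert_shade(normal: Vec3, light_dir: Vec3, base_color: Vec3) -> Vec3:
    """Diffuse (Lambertian) lighting for one pixel.

    Brightness is proportional to the cosine of the angle between the
    surface normal and the direction toward the light, clamped at zero so
    surfaces facing away from the light go dark.
    """
    intensity = max(0.0, dot(normalize(normal), normalize(light_dir)))
    return (base_color[0] * intensity,
            base_color[1] * intensity,
            base_color[2] * intensity)


red = (1.0, 0.0, 0.0)
up = (0.0, 0.0, 1.0)
print(lambert_shade(up, (0.0, 0.0, 1.0), red))            # light straight overhead: fully lit
print(lambert_shade(up, (math.sqrt(3.0), 0.0, 1.0), red))  # light 60 degrees off: about half as bright
```

Because each pixel’s result depends only on its own inputs, the same function can run on thousands of cores at once without the cores having to coordinate.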

VRAM (Video RAM)

This is the dedicated high-speed memory that stores textures, images, and other graphics data so the GPU can access them quickly. More VRAM lets the card keep larger, higher-resolution assets close at hand, which helps with demanding tasks like 4K gaming or 3D rendering; when a task needs more than the card has, data must be shuffled in from slower system memory, which can cause stuttering.
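
For a feel of how resolution eats into VRAM, here’s some simple arithmetic for a single uncompressed frame. It assumes 4 bytes per pixel (8-bit red, green, blue, and alpha channels); textures, depth buffers, and models take far more memory than this, so it only shows one small piece of the picture. `frame_buffer_bytes` is a hypothetical helper.

```python
def frame_buffer_bytes(width: int, height: int, bytes_per_pixel: int = 4) -> int:
    """Size of one uncompressed frame; 4 bytes per pixel assumes 8-bit RGBA."""
    return width * height * bytes_per_pixel


resolutions = {"1080p": (1920, 1080), "1440p": (2560, 1440), "4K": (3840, 2160)}

for name, (w, h) in resolutions.items():
    mb = frame_buffer_bytes(w, h) / 1_000_000  # decimal megabytes
    print(f"{name}: {mb:.1f} MB per frame")
```

This prints about 8.3 MB for 1080p, 14.7 MB for 1440p, and 33.2 MB for 4K. Since 4K has exactly four times the pixels of 1080p, every full-resolution buffer a game keeps is four times larger too.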

Cooling systems

Graphics cards generate significant heat when under heavy load. Cooling systems—whether traditional fans or advanced liquid cooling solutions—are critical for maintaining safe temperatures. They not only improve stability and lifespan but also prevent throttling, which occurs when a card reduces performance to avoid overheating.
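
Throttling is essentially a feedback loop: when the temperature crosses a limit, the card lowers its clock speed, and when things cool down, it speeds back up. Here is a toy Python model of that loop. The 83 °C limit, 15 MHz step, and clock range are made-up numbers, and real cards use far more sophisticated firmware-controlled boost algorithms; `next_clock_mhz` is a hypothetical name.

```python
def next_clock_mhz(current_mhz: int, temp_c: float,
                   limit_c: float = 83.0, step_mhz: int = 15,
                   min_mhz: int = 1200, max_mhz: int = 1800) -> int:
    """Toy thermal-throttling rule (hypothetical thresholds and step sizes).

    Above the temperature limit, drop the clock one step; at or below it,
    raise the clock one step. The result always stays within
    [min_mhz, max_mhz].
    """
    if temp_c > limit_c:
        proposed = current_mhz - step_mhz
    else:
        proposed = current_mhz + step_mhz
    return max(min_mhz, min(max_mhz, proposed))


clock = 1800
for temp in (75, 84, 86, 85, 80):
    clock = next_clock_mhz(clock, temp)
    print(f"{temp} C -> {clock} MHz")
```

Feeding it temperatures of 75, 84, 86, 85, and 80 °C starting from 1,800 MHz steps the clock down to 1,755 MHz while the card runs hot, then back up to 1,770 MHz once it cools.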

History of GPUs

The journey of GPUs is as fascinating as the technology itself. Back in the 1980s, personal computers struggled with even the simplest graphics. Early 2D accelerators could barely manage colored windows and text without lag.

Then the 1990s arrived, and with them the golden era of gaming. People wanted 3D graphics, and companies like NVIDIA and ATI raced to meet demand. In 1995, NVIDIA released the NV1, an early attempt at 3D graphics. Four years later, it introduced the GeForce 256 and marketed it as the world’s first “GPU,” popularizing the term. That moment marked a turning point, not just for gamers but for computing as a whole.

| Year | Milestone |
|---|---|
| 1980s | 2D accelerators appear in personal computers |
| 1995 | NVIDIA releases the NV1 with 3D capabilities |
| 1999 | GeForce 256 is marketed as the first “GPU” |
| 2006 | CUDA lets GPUs handle general-purpose computing tasks |
| 2010s | GPUs transform AI, crypto mining, and creative workflows |

As gaming grew, so did GPUs. By the 2010s, researchers had discovered that GPUs weren’t just good at graphics; they were also remarkably well suited to training AI models. Suddenly, GPUs weren’t just for fun. They were shaping the future.

Types of GPUs

Integrated GPUs

Integrated GPUs are built into the same chip (or package) as the CPU and share the computer’s main memory instead of having their own. They’re lightweight, energy-efficient, and perfect for everyday activities like watching Netflix, browsing, or working in spreadsheets. A college student with a budget laptop probably relies on integrated graphics without even knowing it.

Dedicated GPUs

Dedicated GPUs are separate pieces of hardware with their own memory (VRAM). They’re the beasts behind high-performance gaming rigs, AI research, and professional design stations. If you’ve ever seen a massive graphics card glowing inside a gamer’s PC, you’ve seen a dedicated GPU.

External GPUs (eGPUs)

For those who want the portability of a laptop but the power of a desktop, external GPUs are lifesavers. Housed in sleek boxes, they usually connect through Thunderbolt ports and give laptops an instant performance boost. Filmmakers often use them on the go to render videos without lugging around a full workstation.

How Does a GPU Work?

At its heart, a GPU is all about teamwork. When software, like a game or a machine learning framework, sends instructions, the GPU breaks the work into thousands of smaller jobs. Its cores then handle those pieces simultaneously.

Here’s how it flows:

- The software sends tasks to the GPU.

- The GPU splits those tasks into smaller chunks.

- The cores process the chunks in parallel.

- The final result appears on your screen or, for non-graphics work, is sent back to the software.
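
The four steps above can be sketched in Python using a toy “image” of grayscale pixel values. Python threads stand in for GPU cores, so this shows the split, process, and combine structure rather than real GPU speed; the function names and the brighten-by-40 operation are hypothetical examples.

```python
from concurrent.futures import ThreadPoolExecutor


def brighten(pixel: int, amount: int = 40) -> int:
    """The 'small job' each worker performs: brighten one grayscale pixel, capped at 255."""
    return min(255, pixel + amount)


def split_into_chunks(data: list[int], num_chunks: int) -> list[list[int]]:
    """Step 2: split the task into roughly equal, independent chunks."""
    if not data:
        return []
    size = -(-len(data) // num_chunks)  # ceiling division
    return [data[i:i + size] for i in range(0, len(data), size)]


def process_chunk(chunk: list[int]) -> list[int]:
    """Step 3: each worker handles its chunk without needing the others."""
    return [brighten(p) for p in chunk]


def render(image: list[int], workers: int = 4) -> list[int]:
    chunks = split_into_chunks(image, workers)
    # Threads stand in for GPU cores. Because of CPython's global interpreter
    # lock, they show the split/process/combine structure, not real speedup.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        results = pool.map(process_chunk, chunks)  # map keeps chunk order
        # Step 4: stitch the chunks back together in their original order.
        return [p for chunk in results for p in chunk]


image = [0, 100, 200, 250, 30, 60, 90, 120, 215, 10]  # Step 1: the "task"
print(render(image))
```

Running it prints `[40, 140, 240, 255, 70, 100, 130, 160, 255, 50]`. The key design choice is that no chunk depends on any other, so the workers never wait on each other, which is the same property that lets a GPU keep thousands of cores busy.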

Think about playing a game like Fortnite. Every texture, shadow, and character movement on screen depends on the GPU handling countless calculations every second. Or imagine an AI generating artwork: the GPU crunches the enormous number of calculations behind each image, which is what makes the process fast.

Pros & Cons of a GPU

GPUs feel like superheroes, but even superheroes have flaws.

| Pros | Cons |
|---|---|
| Incredible parallel performance | Expensive for high-end models |
| Essential for gaming & AI | High energy consumption |
| Reduces CPU workload | Can overheat if poorly cooled |
| Enhances creative productivity | Needs frequent driver updates |

For gamers, the pros are obvious: smoother gameplay, stunning visuals, and less lag. For researchers, it’s faster results. But if you’ve ever seen your electricity bill spike after running GPU-heavy tasks like crypto mining, you know the cons are very real.
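
To see how that bill adds up, the arithmetic is simple: watts divided by 1,000, times hours of use, gives kilowatt-hours (kWh), which you multiply by your electricity rate. The 350 W draw and $0.15 per kWh rate below are hypothetical; check your own card’s power rating and your utility’s price. `energy_cost` is a hypothetical helper.

```python
def energy_cost(watts: float, hours: float, price_per_kwh: float) -> float:
    """Cost of running a device: (watts / 1000) * hours gives kWh, times the price."""
    kwh = watts / 1000 * hours
    return kwh * price_per_kwh


# Hypothetical numbers: a 350 W card running 24 hours a day for 30 days at $0.15 per kWh.
monthly = energy_cost(watts=350, hours=24 * 30, price_per_kwh=0.15)
print(f"${monthly:.2f} per month")
```

With those assumptions, running the card around the clock for a month uses 252 kWh and costs about $37.80, and that covers only the GPU, not the rest of the system.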

Uses of a GPU

GPUs have become indispensable across industries. Let’s look at where they shine the brightest.

Gaming

Gamers have always been the earliest adopters of Graphics Cards. From realistic landscapes to lifelike characters, Graphics Cards transform virtual worlds into immersive experiences that feel alive.

Artificial Intelligence

AI researchers depend on GPUs to process massive datasets. Training a large neural network without a GPU could take months; with one, it might take only days. That speedup is a big part of why breakthroughs in AI, like self-driving cars and language models, are arriving so quickly.
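
Much of neural-network training comes down to multiplying matrices, and every entry of the result can be computed independently of the others. That independence is exactly what lets a GPU spread the work across thousands of cores. The pure-Python `matmul` below is a hypothetical teaching sketch that loops over the entries one at a time; real frameworks hand this operation to highly optimized GPU libraries instead.

```python
def matmul(a: list[list[float]], b: list[list[float]]) -> list[list[float]]:
    """Naive matrix multiply: C[i][j] = sum over k of A[i][k] * B[k][j].

    Each C[i][j] depends only on row i of A and column j of B, so all
    entries could be computed at the same time; a GPU can spread them
    across many threads instead of looping over them one by one.
    """
    rows, inner, cols = len(a), len(b), len(b[0])
    if any(len(row) != inner for row in a):
        raise ValueError("inner dimensions must match")
    return [
        [sum(a[i][k] * b[k][j] for k in range(inner)) for j in range(cols)]
        for i in range(rows)
    ]


# A tiny "layer": 2 inputs times a 2x3 weight matrix gives 3 outputs.
inputs = [[1.0, 2.0]]
weights = [[0.5, -1.0, 0.0],
           [0.25, 1.0, 2.0]]
print(matmul(inputs, weights))
```

It prints `[[1.0, 1.0, 4.0]]`. Each of those three outputs uses only the inputs and one column of weights, so on a GPU they could all be computed at the same moment.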

Creative Industries

From video editors cutting films to animators building 3D characters, creative professionals rely on GPUs to render their visions faster. Less time waiting on a loading bar means more time focusing on artistry.

Scientific Research

GPUs don’t just create entertainment—they save lives. Scientists use them for weather simulations, genetic sequencing, and even space research. NASA, for instance, uses GPU-powered simulations to prepare for missions.
