In the rapidly evolving world of machine learning, keeping up with the best tools can feel overwhelming. One standout that continues to make waves is PyTorch Lightning, a powerful wrapper for PyTorch that helps simplify the process of building and scaling deep learning models. For those in the research, academic, or industry space, understanding PyTorch Lightning’s role and capabilities can greatly boost both productivity and creativity. This article offers an engaging and comprehensive look at what it is, how it works, and why it has become essential in today’s deep learning ecosystem.

What is PyTorch Lightning?

This section introduces the reader to the core concept of PyTorch Lightning, offering a clear definition and placing it within the broader context of machine learning frameworks.

PyTorch Lightning is best described as a lightweight, open-source wrapper for PyTorch that abstracts away much of the repetitive engineering work involved in deep learning projects. Instead of managing GPU devices, distributed training, and logging setups manually, PyTorch Lightning handles these details behind the scenes. This allows researchers, developers, and engineers to focus on what truly matters: designing and refining the core machine learning model.

While some refer to it as a “PyTorch research framework” or “scalable PyTorch toolkit,” the heart of Lightning remains grounded in pure PyTorch, ensuring users can access the full flexibility of the underlying library without the boilerplate.

Breaking Down PyTorch Lightning

This section breaks the definition into digestible components, showing readers how the framework is structured and why it simplifies complex tasks.

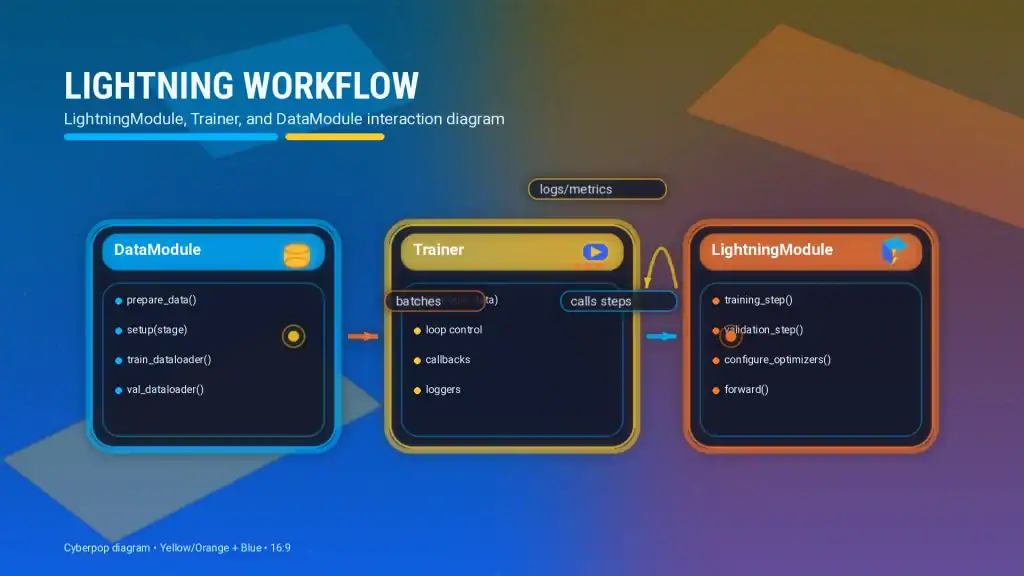

PyTorch Lightning organizes a deep learning project into three main components:

- LightningModule: Contains the model’s architecture, the forward pass, and key methods for training, validation, and testing.

- Trainer: Runs the training loop and handles engineering concerns such as checkpointing, early stopping (through callbacks), and stepping any learning-rate schedulers the model defines.

- DataModule: Separates data loading, transformations, and preparation, making it easier to reuse and scale datasets across experiments.

For example, instead of writing training loops and device-management logic by hand, a user can call `trainer.fit(model, datamodule=datamodule)`. Whether training on a single GPU, multiple GPUs, or multiple nodes, PyTorch Lightning manages the logistics. Scaling up is typically a matter of changing a few `Trainer` arguments such as `accelerator`, `devices`, and `num_nodes`.

History of PyTorch Lightning

This section provides context, tracing the origins and development journey of the framework, giving the reader a sense of its credibility and growth.

PyTorch Lightning was created by William Falcon during his PhD research at New York University. Faced with the challenge of managing complex, large-scale experiments, Falcon designed Lightning to help researchers prioritize innovation over technical overhead.

Over the years, Lightning has grown from a niche tool to a widely adopted framework supported by the open-source community and the Lightning AI platform. Its timeline reflects this steady rise:

| Milestone | Year |
|---|---|
| Initial commit | 2019 |
| First official release | 2020 |
| Lightning AI platform launch | 2022 |
| Expanded industry adoption | 2023–present |

With each release, the framework has expanded its capabilities, integrating better logging tools, optimization techniques, and broader hardware support.

Types of PyTorch Lightning

This section takes a closer look at the main building blocks of the Lightning ecosystem and how each one suits a different kind of work, from quick experiments to complex, production-ready pipelines. Knowing which layer fits which problem helps developers and researchers choose the right tool for their specific challenges.

LightningModule

The LightningModule is the heart and soul of any PyTorch Lightning project. It wraps the traditional PyTorch model inside a well-structured interface, where the user defines the forward pass, loss function, optimizers, and important steps like training, validation, and testing.

This module shines in academic settings and research labs where clarity and reproducibility are paramount. By separating the engineering from the modeling logic, researchers can test hypotheses quickly, make cleaner pull requests, and collaborate more effectively with peers. Additionally, the LightningModule integrates seamlessly with other PyTorch components, making it an excellent starting point for anyone familiar with the PyTorch ecosystem but eager to reduce clutter and improve code maintainability.

Lightning Flash

For those looking for speed and convenience, Lightning Flash offers a delightful solution. Built on top of the LightningModule, Flash provides ready-to-use components for common tasks such as image classification, object detection, text classification, and speech recognition.

What makes Flash special is its plug-and-play architecture. Instead of spending hours (or even days) writing boilerplate or configuring custom pipelines, developers can import a prebuilt task, plug in their dataset, and start training almost immediately. Flash is particularly popular in hackathons, startups, and prototyping teams where time is limited but experimentation is critical. Moreover, its simple, user-friendly API lowers the barrier for beginners who want to experiment with deep learning without mastering every fine-grained detail.

Lightning Fabric

Lightning Fabric is where power users and industry veterans come to play. Fabric offers a lower-level interface that gives developers fine-grained control over their training loops, optimization strategies, and distributed systems—while still providing Lightning’s hardware abstraction and performance benefits.

In large companies and cutting-edge production environments, teams often need to squeeze every ounce of performance out of their infrastructure. They may need to run custom training loops, fine-tune memory allocations, or integrate Lightning with external services. Fabric is designed for these scenarios, offering flexibility without sacrificing scalability. With Fabric, users can confidently scale their models across dozens (or even hundreds) of GPUs, implement custom parallelism strategies, and maintain robust control over experiment tracking.

Lightning Apps

An exciting new addition to the Lightning ecosystem is Lightning Apps, which allows developers to build and deploy full AI applications on top of Lightning’s powerful engine. Lightning Apps bring the simplicity of Lightning to the world of AI workflows, making it easier to orchestrate multi-stage pipelines, integrate third-party tools, and deploy end-to-end solutions without reinventing the wheel.

How Does PyTorch Lightning Work?

This section explains the inner workings, providing a behind-the-scenes look at how the framework streamlines engineering tasks.

At its core, PyTorch Lightning automates the repetitive tasks that typically clutter deep learning projects. This includes:

- Handling device placement (CPU, GPU, TPU)

- Managing mixed-precision training (FP16 or BF16)

- Logging metrics and visualizations with tools like TensorBoard and Weights & Biases (W&B)

- Saving checkpoints automatically so that an interrupted run can be resumed from its last saved state

- Simplifying multi-node and multi-GPU training setups

This design reduces human error and boosts reproducibility, two critical concerns in both research and production environments. Essentially, PyTorch Lightning lets users write the what (model logic) while it manages the how (execution flow).

PyTorch Lightning Pros & Cons

This section offers a balanced overview of PyTorch Lightning’s advantages and potential drawbacks, helping readers make informed decisions.

| Pros | Cons |
|---|---|
| Dramatic reduction in boilerplate code | Slight learning curve for beginners |
| Easy scaling across multiple GPUs and nodes | Abstracts some low-level PyTorch mechanics |
| Seamless integration with logging tools | Adds an extra layer of complexity for experts |
| Robust community and documentation | Rapid updates may require ongoing adaptation |
| Strong focus on reproducibility and clarity | Some features still maturing or experimental |

While no framework is perfect, PyTorch Lightning’s benefits often outweigh its limitations, especially for teams aiming to scale deep learning workloads efficiently.

Uses of PyTorch Lightning

This section explores the wide-ranging applications of PyTorch Lightning, demonstrating how its features translate into real-world benefits across different industries and domains. Whether in research labs, bustling startups, or massive corporate systems, PyTorch Lightning helps professionals work smarter, faster, and with greater confidence.

Academic Research

In the world of academic research, reproducibility is king. Researchers often need to share their code with colleagues, supervisors, or collaborators around the globe. PyTorch Lightning ensures that the engineering setup is standardized, making experiments easier to replicate. Instead of wrestling with device setups or custom training loops, academics can zero in on novel algorithms, optimization strategies, and fresh hypotheses. This streamlined workflow not only accelerates publication timelines but also improves collaboration across research groups and universities.

Moreover, since academic environments often run on limited resources, Lightning’s scalability—being able to shift from a single laptop to a multi-GPU cluster with minimal changes—makes it a practical, cost-effective solution.

Industry Applications

In industry, where time is money, PyTorch Lightning proves its worth by enabling companies to train models faster, deploy them more efficiently, and monitor their performance with greater accuracy. Industries such as autonomous driving, healthcare diagnostics, e-commerce recommendation systems, and financial forecasting rely on enormous datasets that demand robust, scalable architectures.

For example, in the autonomous driving sector, teams can use Lightning to train large multi-camera and multi-sensor models across GPU clusters, where reliable, reproducible training runs matter for safety-critical systems. Meanwhile, healthcare firms can use Lightning to fine-tune diagnostic imaging models for tasks such as disease detection.

Hackathons and Prototyping

Lightning shines brightly in the high-energy, fast-paced environments of hackathons and rapid prototyping projects. Teams working under tight deadlines can skip over mundane engineering hurdles and focus on innovation, creativity, and delivering minimum viable products (MVPs). By reducing boilerplate, participants can explore cutting-edge architectures, experiment with advanced regularization techniques, and integrate novel datasets without worrying about writing (and debugging) infrastructure code.

This speed advantage can make the difference between winning a competition and simply participating. It also gives early-stage startups the agility to bring new ideas to market ahead of competitors.

Teaching and Education

Educational settings—from university classrooms to online courses and bootcamps—benefit tremendously from Lightning’s structured approach. Instructors can guide students through the principles of deep learning without burdening them with the intricacies of hardware management or error handling.

By abstracting away repetitive tasks, students can focus on the why and how of neural networks, gaining a deeper understanding of core concepts like backpropagation, gradient descent, and overfitting. This approach not only enhances comprehension but also boosts students’ confidence in tackling real-world machine learning challenges.

Large-Scale Deployments

For enterprise-level deployments, where models must scale across massive datasets and hardware clusters, Lightning’s Fabric module delivers the performance and customization necessary to meet demanding requirements. Businesses running complex pipelines—such as natural language processing for real-time translation or recommendation engines analyzing millions of user interactions—can leverage Lightning’s distributed training capabilities to optimize their systems efficiently.

Additionally, Lightning’s integration with logging and monitoring tools ensures that engineers can track performance metrics, identify bottlenecks, and perform detailed error analysis at every stage of production.
