The first time I watched a model confidently deny a perfectly qualified applicant, my brain immediately asked: “Okay… but why?” That question is exactly where Explainable AI steps in. Across the technology sector, where algorithms now help approve loans, flag fraud, suggest medical diagnoses, and guide vehicles, clarity isn’t a luxury; it’s a requirement. Explainable AI is the difference between “the system said so” and “here’s the evidence the system used, here’s what mattered most, and here’s what could change the outcome.”

When people can understand the reasoning, they can challenge it, correct it, and trust it—especially when the stakes are high. And in a world sprinting toward more automated decisions, learning how this transparency works helps teams build safer products, regulators create smarter rules, and everyday users feel less like they’re being judged by a mysterious machine behind a curtain.

What is Explainable AI?

Explainable AI (often abbreviated XAI) refers to methods and systems that make an AI model’s decisions understandable to humans. Instead of acting like a sealed “black box,” the model can provide reasons, such as which features mattered most, what evidence influenced the result, or how changing an input might alter the outcome. You’ll also hear Explainable AI described as “interpretable AI” or “transparent AI,” because the goal is to help humans see how a model thinks (at least in a practical, decision-focused way) without needing a PhD to decode it. This matters not only for trust, but for accountability: when a model affects health, money, or safety, people need explanations that can be tested, audited, and discussed.

Breaking Down Explainable AI

At its core, Explainable AI exists because modern machine learning got too good at being complex. Deep networks can spot patterns humans miss, but they often struggle to justify themselves in plain language. That becomes a problem the moment an AI decision affects someone’s life: if you can’t explain why a system made a call, it’s hard to know whether it made the right call—or whether it learned shortcuts, bias, or noise.

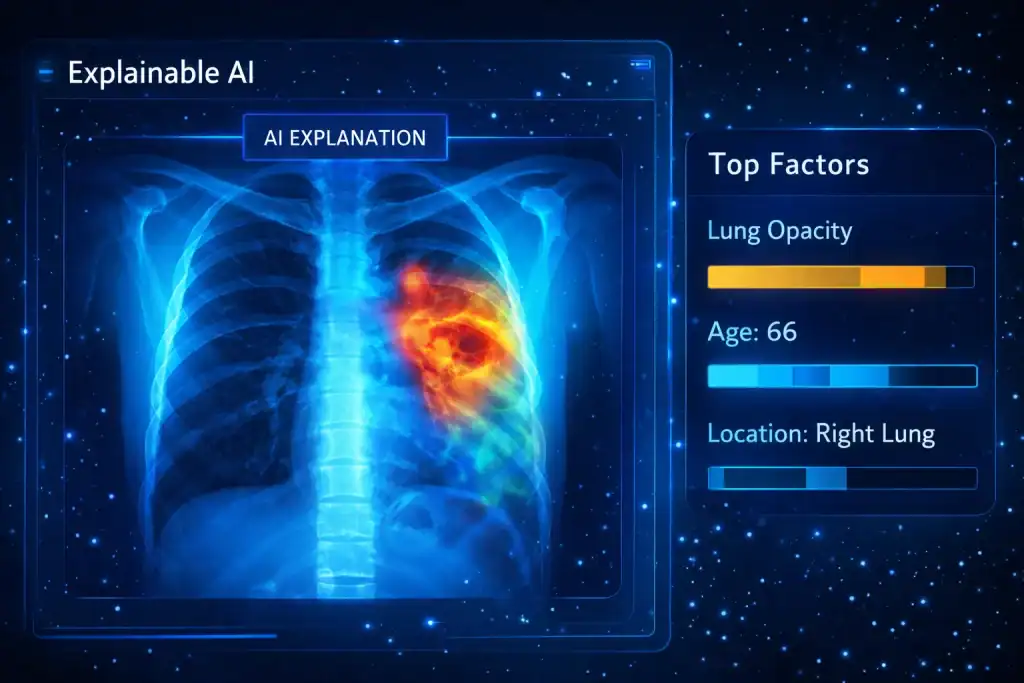

One easy way to understand Explainable AI is to imagine two roles: the “predictor” and the “storyteller.” The predictor makes the decision (approve the loan, flag the X-ray, classify the transaction). The storyteller translates that decision into something a human can evaluate: “These factors contributed most,” “This variable was unusually high,” or “The model relied heavily on this region of the image.” Done well, that translation is not fluff; it’s a practical diagnostic tool.

A second helpful idea is that explanation is often about contrast. Humans don’t just want “why this?” They want “why this instead of that?” For example: Why was one applicant approved while another was denied? Why did the model label this scan as high-risk rather than moderate? Explanations that offer comparisons are easier to act on because they give teams levers: adjust data, adjust thresholds, improve features, or correct bias.

Third, explainability strengthens the human–AI relationship. When explanations are clear, experts can apply their own judgment instead of blindly accepting outputs. A doctor can say, “That variable shouldn’t matter clinically,” or a risk analyst can spot a suspicious pattern like “ZIP code dominates the decision.” That feedback loop can improve models over time, because the explanation becomes a debugging map, not just a justification.

Finally, it also helps bridge trust gaps created by fast adoption. Many organizations want the benefits of automation, but they also fear headlines about unfair decisions or unsafe behavior. Explanations provide a middle path: adopt powerful models while still maintaining oversight, auditability, and confidence. In practice, the best results come when explainability is treated like a product feature—tested with real users, refined for clarity, and measured for usefulness—rather than a last-minute box to tick.

History of Explainable AI

Explainable AI has roots in early expert systems, where rules were explicit and traceable, but the need became urgent once complex models became common. As AI entered healthcare, finance, and law, concerns about bias, safety, and accountability pushed researchers and organizations to create tools that could clarify decisions from opaque models. Today, it is often discussed alongside responsible AI practices, because explanation supports transparency, compliance, and trust.

| Time period | Key shift | Why it mattered |
|---|---|---|
| Mid-20th century | Rule-based AI and expert systems | Decisions were explainable by design |
| 1980s–2000s | Statistical learning grows | Models become less intuitive to interpret |
| 2010s | Deep learning boom | Accuracy rises; “black box” concerns explode |
| Present | Broad adoption of XAI toolkits | Explainability becomes essential in high-stakes use |

Types of Explainable AI

Different approaches to Explainable AI focus on where explanation happens—inside the model or added afterward—and what kind of explanation the user needs.

Intrinsic (built-in interpretability)

This approach uses models that are understandable by structure, like linear models, decision trees, or rule lists. Explainable AI is “native” here: you can trace decisions directly, often with straightforward logic. These models are attractive when audits and clarity matter more than squeezing out the last bit of accuracy.
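
To make “trace decisions directly” concrete, here is a minimal sketch of a linear scoring model whose explanation is simply its own arithmetic. The feature names, weights, and bias are hypothetical values chosen for illustration, not taken from any real lending system.

```python
import math

# Hypothetical weights and bias for an illustrative loan-scoring model.
WEIGHTS = {"income_k": 0.04, "debt_ratio": -3.0, "late_payments": -0.8}
BIAS = -0.4


def explain_linear(applicant):
    """Return the approval probability and each feature's contribution to the logit."""
    contributions = {name: WEIGHTS[name] * applicant[name] for name in WEIGHTS}
    logit = BIAS + sum(contributions.values())
    probability = 1.0 / (1.0 + math.exp(-logit))
    # Rank features by the size of their push, regardless of direction.
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return probability, ranked


applicant = {"income_k": 60, "debt_ratio": 0.5, "late_payments": 2}
probability, ranked = explain_linear(applicant)

print(f"approval probability: {probability:.2f}")
for name, value in ranked:
    print(f"{name:>14}: {value:+.2f}")
```

Because every contribution is just weight times value, an auditor can recompute the decision by hand. That is the property that makes this family of models attractive in audit-heavy settings.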

Post-hoc (explanations after training)

Here, a complex model is trained for performance first, and explanation tools are applied afterward. Explainable AI methods like feature attribution, local surrogate models, or counterfactual explanations help users interpret decisions without replacing the high-performing model itself.
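
As a rough illustration of post-hoc feature attribution, the sketch below treats a model as a sealed function and measures how the score moves when each input is swapped for a baseline value. The `black_box` function, the feature names, and the “typical applicant” baseline are all assumptions made up for this example. This is a simple one-at-a-time occlusion scheme, not the algorithm of any particular toolkit.

```python
def black_box(features):
    """Stand-in for an opaque model: we only call it, never look inside."""
    income_k, debt_ratio, late_payments = features
    score = 0.02 * income_k - 2.0 * debt_ratio - 0.3 * late_payments
    if debt_ratio > 0.4 and late_payments >= 2:
        score -= 1.0  # an interaction that a simple linear readout would miss
    return score


def occlusion_attribution(model, instance, baseline):
    """For each feature, measure how the score changes when that feature
    alone is swapped for its baseline value."""
    full_score = model(instance)
    attributions = []
    for i in range(len(instance)):
        perturbed = list(instance)
        perturbed[i] = baseline[i]
        attributions.append(full_score - model(perturbed))
    return attributions


FEATURES = ["income_k", "debt_ratio", "late_payments"]
instance = [60, 0.5, 3]
baseline = [50, 0.3, 0]  # hypothetical "typical applicant"

attributions = occlusion_attribution(black_box, instance, baseline)
for name, value in zip(FEATURES, attributions):
    print(f"{name:>14}: {value:+.2f}")
```

A negative value means the feature pulled the score down relative to the baseline. Note that one-at-a-time occlusion counts the interaction penalty under both `debt_ratio` and `late_payments`, so the attributions do not add up to the total gap between the instance and the baseline. Shapley-value methods are designed to address exactly that.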

Example-based explanations

Some systems explain decisions by showing similar prior cases (“this looks like these examples”), which can be highly intuitive. This style of Explainable AI is especially helpful when stakeholders prefer evidence they can visually compare.
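
A minimal sketch of the “this looks like these examples” idea, assuming a tiny, made-up case history and hand-picked feature scales (both hypothetical): the explanation for a new applicant is simply the most similar past cases and their outcomes.

```python
import math

# Hypothetical past cases: (income_k, debt_ratio, late_payments) and the outcome.
PAST_CASES = [
    ((62, 0.45, 2), "denied"),
    ((85, 0.20, 0), "approved"),
    ((58, 0.50, 3), "denied"),
    ((70, 0.30, 1), "approved"),
]

# Assumed per-feature scales so that no single unit dominates the distance.
SCALES = (100.0, 1.0, 5.0)


def distance(a, b):
    """Scaled Euclidean distance between two feature tuples."""
    return math.sqrt(sum(((x - y) / s) ** 2 for x, y, s in zip(a, b, SCALES)))


def nearest_cases(query, k=2):
    """Return the k past cases most similar to the query."""
    return sorted(PAST_CASES, key=lambda case: distance(query, case[0]))[:k]


query = (60, 0.48, 2)
neighbors = nearest_cases(query)
for features, outcome in neighbors:
    print(f"similar case {features} was {outcome}")
```

How similarity is measured is itself a design decision. A reviewer should be able to see and question the scales as well as the retrieved cases.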

| Type | How it explains | Typical fit |
|---|---|---|
| Intrinsic | Transparent structure | Regulated or audit-heavy settings |
| Post-hoc | Attributions, local models | Complex deep learning systems |
| Example-based | Similar cases | Human-centered review workflows |

How Does Explainable AI Work?

In practice, Explainable AI works by attaching interpretable signals to a prediction. Depending on the method, it might rank which inputs mattered most, highlight influential regions in an image, show “what would need to change” for a different outcome, or provide a simplified local approximation of the model near a single decision. Many teams combine visual cues, numerical scores, and short narratives so explanations are both precise and readable. The goal isn’t to reveal every hidden weight inside a neural network; it’s to give humans a reliable, testable explanation that supports oversight, debugging, and accountability. When done right, it becomes a practical interface between complex mathematics and real-world decision-making.
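
The “what would need to change” style of explanation can be sketched as a small search. The decision rule, step sizes, and feature names below are hypothetical. The sketch only tries changing one feature at a time in a plausible direction, which is far simpler than what dedicated counterfactual tools do.

```python
def approve(applicant):
    """Stand-in decision rule (hypothetical): approve when the score is non-negative."""
    score = (
        0.04 * applicant["income_k"]
        - 3.0 * applicant["debt_ratio"]
        - 0.8 * applicant["late_payments"]
        - 0.4
    )
    return score >= 0


# Assumed step size and direction in which each feature could realistically move.
STEPS = {"income_k": 5, "debt_ratio": -0.05, "late_payments": -1}


def single_feature_counterfactuals(applicant, max_steps=20):
    """For each feature, find the first stepped value that flips a denial to approval."""
    results = {}
    for name, step in STEPS.items():
        candidate = dict(applicant)
        for n in range(1, max_steps + 1):
            candidate[name] = applicant[name] + n * step
            if candidate[name] < 0:
                break  # negative values are not meaningful for these features
            if approve(candidate):
                results[name] = round(candidate[name], 2)
                break
    return results


applicant = {"income_k": 60, "debt_ratio": 0.5, "late_payments": 2}
counterfactuals = single_feature_counterfactuals(applicant)

print("currently approved:", approve(applicant))
for name, value in counterfactuals.items():
    print(f"approved if {name} were {value} (now {applicant[name]})")
```

Each line of output is a lever a person could actually discuss (“approved if late payments were 0”), which is what makes counterfactuals feel more actionable than a raw score.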

Pros & Cons of Explainable AI

Explainable AI is incredibly useful, but it comes with trade-offs: explanations can be misunderstood, oversimplified, or mistaken for “truth” rather than helpful evidence. Still, it’s a major step forward for safer deployment of advanced technology in high-stakes settings.

| Pros | Cons |
|---|---|
| Improves trust, accountability, and auditability | Explanations can oversimplify complex behavior |
| Helps detect bias and data leakage | Some methods add computational overhead |
| Makes debugging faster and more targeted | Risk of “explanation theater” if poorly designed |
| Supports better human–AI collaboration | Not all models are equally explainable |

Uses of Explainable AI

The best way to appreciate Explainable AI is to see where it prevents real-world damage and improves decisions—especially in systems that affect people directly. Below are common industry uses, framed in a human-first way, because explanation should serve the user, not the model.

Healthcare decision support

Hospitals and clinicians need more than accuracy—they need reasoning they can trust. Explainability helps clinicians understand what features influenced a risk score or diagnostic suggestion, making it easier to validate results, catch errors, and justify actions to patients and review boards. It’s not about replacing doctors; it’s about making model outputs reviewable and accountable.

Finance, lending, and fraud

Financial institutions often must justify decisions to customers and regulators. Explanations can clarify what drove a credit decision, which behaviors triggered fraud alerts, or why a transaction was flagged. This is crucial for compliance and for reducing the frustration customers feel when they’re denied with no meaningful reason.

Autonomous systems and safety-critical tools

In robotics and self-driving research, explanations can help engineers identify failure modes and validate that a system relied on sensible cues rather than shortcuts. Even when a model performs well overall, explainability can uncover rare but dangerous edge cases.

Smart infrastructure and connected environments

As IoT devices spread into cities, factories, and utilities, more automated decisions affect physical operations. Explainability can reveal why a system rerouted traffic, changed energy loads, or flagged a sensor anomaly, which is critical for safety and reliability. This is where innovation becomes operational: transparent decisions are easier to trust, maintain, and improve over time.

Across all of these uses, the practical benefit is model transparency: clearer reasoning that humans can verify.
