Let’s face it, natural language processing is reshaping how we interact with technology, and Hugging Face Transformers are leading the charge in this revolution. These powerful tools have become essential for developers and researchers who need to process and understand human language through machines. From powering chatbots and virtual assistants to enabling sentiment analysis and automatic summarization, their applications are vast and growing every day.

So why this review? As someone deeply involved in machine learning projects, I’ve used various frameworks and libraries, but Hugging Face consistently stands out. Its accessibility, depth of features, and strong community support make it unique. Whether you are a hobbyist eager to explore AI or a seasoned engineer building enterprise-grade solutions, understanding what this tool offers can be a game-changer. This review dives deep into its capabilities, comparing it with other platforms and offering real-world insight into its strengths and challenges. Let’s explore what makes Hugging Face Transformers so impactful today.

Overview of Hugging Face Transformers

When it comes to modern natural language processing, Hugging Face Transformers have become the go-to solution for developers and data scientists alike. These models are pre-trained on vast amounts of data, making them capable of performing advanced tasks such as sentiment analysis, machine translation, text summarization, and question answering with impressive accuracy. What sets them apart is their adaptability across various domains and languages, which helps streamline NLP workflows significantly.

At the core of this framework are cutting-edge model architectures including BERT, GPT, RoBERTa, DistilBERT, and more. The platform provides easy access to these through a unified API, allowing seamless deployment whether you’re using TensorFlow or PyTorch. Developers can quickly integrate these models into their projects without the need for intensive training processes.
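Here is a rough illustration of that unified API. The sketch loads a sentiment checkpoint through the `AutoTokenizer` and `AutoModelForSequenceClassification` classes and assumes `transformers` and PyTorch are installed. The checkpoint name `distilbert/distilbert-base-uncased-finetuned-sst-2-english` and the helper names `classify` and `logits_to_label` are our own illustrative choices. The pure-Python `logits_to_label` shows what happens to the model's raw scores, so it runs even without the library.

```python
import math


def logits_to_label(logits, id2label):
    """Turn one row of raw classifier logits into a (label, probability) pair.

    Uses a pure-Python softmax, so this helper runs without torch installed.
    """
    peak = max(logits)  # subtract the max before exponentiating for numerical stability
    exps = [math.exp(x - peak) for x in logits]
    best = max(range(len(logits)), key=lambda i: logits[i])
    return id2label[best], exps[best] / sum(exps)


def classify(texts, checkpoint="distilbert/distilbert-base-uncased-finetuned-sst-2-english"):
    """Load a checkpoint through the Auto classes and classify a list of texts.

    Requires transformers and torch; the checkpoint is downloaded on first use.
    """
    import torch
    from transformers import AutoModelForSequenceClassification, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(checkpoint)
    model = AutoModelForSequenceClassification.from_pretrained(checkpoint)
    model.eval()  # inference mode: disables dropout
    inputs = tokenizer(texts, padding=True, truncation=True, return_tensors="pt")
    with torch.no_grad():  # no gradients are needed for prediction
        logits = model(**inputs).logits.tolist()
    # config.id2label maps class indices to label names, e.g. 0 -> "NEGATIVE"
    return [logits_to_label(row, model.config.id2label) for row in logits]
```

Calling `classify(["I love this library!"])` downloads the checkpoint on first use and returns one `(label, probability)` pair per input text.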

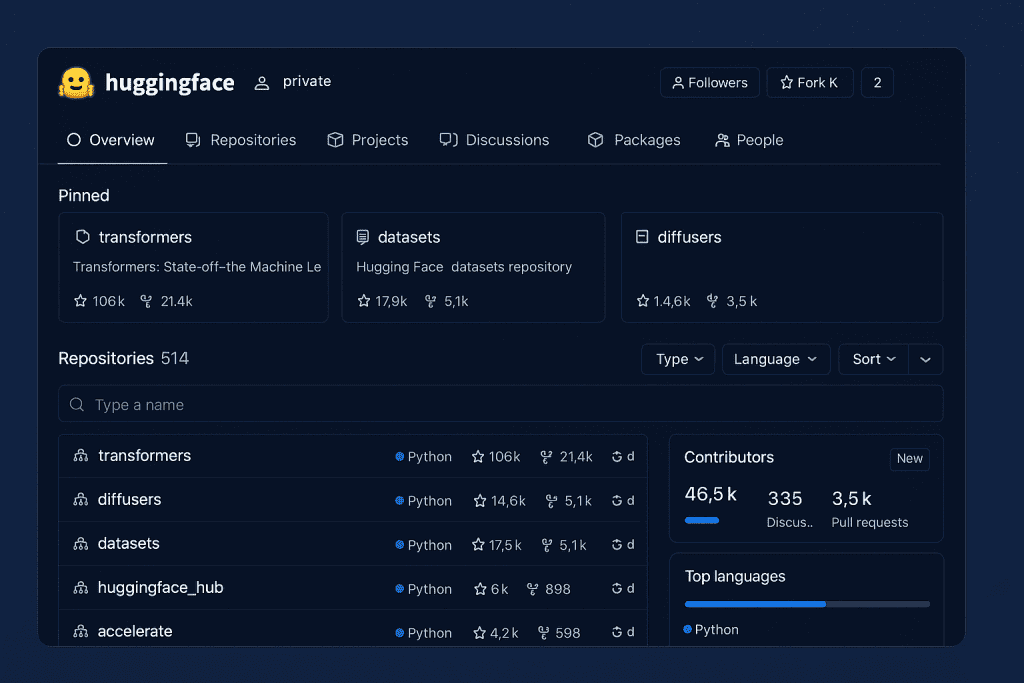

Additionally, the vibrant open-source community and clear documentation make it approachable for beginners while still offering advanced features for experts. From the Transformers GitHub repository to Hugging Face’s Model Hub, everything is designed to speed up NLP development.

In-Depth Analysis of Hugging Face Transformers

Diving deep into the platform’s capabilities reveals how advanced technology can be made accessible to all.

Performance & Scalability

Hugging Face Transformers are built for performance. Whether you’re fine-tuning a model on a personal laptop or deploying across multiple GPUs in a cloud environment, they deliver consistent and efficient results. The models are designed to take advantage of hardware acceleration, so large datasets can be processed without prohibitive slowdowns. Because the library runs on top of established frameworks such as PyTorch and TensorFlow, its speed on tasks like real-time text generation or classification is generally on par with other leading NLP toolkits. With support for CPUs, GPUs, and TPUs, they scale from small experiments to enterprise-grade workloads. This makes them an ideal choice for organizations seeking dependable, high-performance NLP tools.
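To take advantage of whatever accelerator is available, a common pattern is to pick the device at runtime and let the pipeline batch the inputs. The sketch below assumes `transformers` and PyTorch are installed. `select_device` and `classify_many` are hypothetical helper names, and the default batch size of 16 is an arbitrary starting point rather than a tuned value.

```python
def select_device(cuda_available):
    """Map hardware availability to the device argument of transformers.pipeline.

    pipeline() accepts -1 for CPU or a CUDA index such as 0 for the first GPU.
    """
    return 0 if cuda_available else -1


def classify_many(texts, batch_size=16):
    """Run a sentiment pipeline over many texts on the best available device."""
    import torch
    from transformers import pipeline

    classifier = pipeline(
        "sentiment-analysis",
        model="distilbert/distilbert-base-uncased-finetuned-sst-2-english",
        device=select_device(torch.cuda.is_available()),
    )
    # batch_size controls how many texts share one forward pass
    return classifier(texts, batch_size=batch_size)
```

Whether batching actually speeds things up depends on the hardware and input lengths, so it is worth measuring before settling on a value.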

Ease of Use

One of the strongest advantages of Hugging Face Transformers is its simplicity. A single `pip install transformers` (plus a backend such as PyTorch) is enough to get started. The API is straightforward, with clearly named functions and methods, making it intuitive even for beginners. Loading a model and generating predictions takes just a few lines of code. It also works smoothly alongside familiar tools such as pandas, NumPy, and scikit-learn, which makes it even more developer-friendly. Tutorials and guides are plentiful, so users can go from zero to productive quickly. This ease of use streamlines development and encourages experimentation without a steep learning curve.
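This sketch shows roughly what "a few lines of code" looks like in practice, together with a hand-off to pandas. It assumes `transformers`, PyTorch and pandas are installed. `score_dataframe`, `to_columns` and the `text` column name are illustrative choices, not part of the library. The sentiment pipeline returns one `{"label": ..., "score": ...}` dictionary per input, and `to_columns` splits those into two lists.

```python
def to_columns(predictions):
    """Split pipeline output (a list of {"label", "score"} dicts) into parallel lists."""
    labels = [p["label"] for p in predictions]
    scores = [p["score"] for p in predictions]
    return labels, scores


def score_dataframe(df, text_column="text"):
    """Return a copy of a pandas DataFrame with "label" and "score" columns added."""
    from transformers import pipeline

    # Pinning the checkpoint avoids relying on the task's default model
    classifier = pipeline(
        "sentiment-analysis",
        model="distilbert/distilbert-base-uncased-finetuned-sst-2-english",
    )
    predictions = classifier(df[text_column].tolist())
    scored = df.copy()
    scored["label"], scored["score"] = to_columns(predictions)
    return scored
```

For example, `score_dataframe(pd.DataFrame({"text": ["Great docs!", "The install failed."]}))` returns the same rows with a predicted label and confidence score for each.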

Design & Interface

Although Hugging Face is primarily a code-based platform, it offers an intuitive and polished web interface that enhances the user experience. The model hub is neatly organized with filters to search by task, language, and library. Each model’s page includes a full description, tags, license, example code, and even an option to test the model right in the browser. This design empowers users to evaluate models before integrating them into applications. The thoughtful layout saves time by making everything from documentation to example outputs accessible in one place. It’s a practical touch that reflects a deep understanding of developer needs.
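The same filters are also available programmatically through the `huggingface_hub` client library. This sketch assumes a recent `huggingface_hub` release in which `HfApi.list_models` accepts `task`, `language` and `library` keyword arguments. It also assumes the website's search page uses `pipeline_tag`, `language` and `library` query parameters. `hub_search_url` and `top_models` are hypothetical helper names.

```python
from urllib.parse import urlencode


def hub_search_url(task, language=None, library="transformers"):
    """Build a Model Hub search URL (the query-parameter names are an assumption)."""
    params = {"pipeline_tag": task}
    if language:
        params["language"] = language
    if library:
        params["library"] = library
    return "https://huggingface.co/models?" + urlencode(params)


def top_models(task, language=None, library="transformers", limit=5):
    """Return the IDs of the most-downloaded matching models (needs network access)."""
    from huggingface_hub import HfApi

    models = HfApi().list_models(
        task=task,
        language=language,
        library=library,
        sort="downloads",
        direction=-1,  # descending order
        limit=limit,
    )
    return [m.id for m in models]
```

Opening `hub_search_url("text-classification", language="fr")` in a browser should give roughly the same list that `top_models` retrieves from code.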

Community & Support

Hugging Face has built one of the most active and welcoming communities in the AI world. Whether you’re a beginner stuck on your first model or an expert looking to optimize performance, there’s a good chance your questions are already answered in forums or GitHub discussions. The official documentation is regularly updated and enriched with examples, covering a wide range of use cases. Developers also benefit from frequent model updates and new releases through the Transformers GitHub repository. Live events, webinars, and contributions from leading AI researchers further cement Hugging Face as a community-first platform.

Hugging Face Transformers Comparison

To better understand its place in the NLP landscape, it’s helpful to compare Hugging Face Transformers with other major options: OpenAI’s GPT API, Google’s T5 (a model family that is itself available through Transformers), and Microsoft Azure ML NLP services. Each offers unique strengths depending on your project goals.

| Feature | Hugging Face Transformers | OpenAI GPT API | Google T5 | Microsoft Azure NLP |
|---|---|---|---|---|
| Open-source | Yes | No | Yes | No |
| Model Hub | Yes | Limited | Yes | Limited |
| Pretrained Model Support | Extensive | Moderate | High | Basic |
| Community & Docs | Excellent | Good | Good | Moderate |
| Cost | Free (unless hosted) | Paid | Free | Paid |

Hugging Face Transformers Pros and Cons

Here’s a quick snapshot of the highs and lows:

| Pros | Cons |
|---|---|
| Extensive model library | Can be overwhelming for beginners |
| Easy to use with PyTorch & TensorFlow | Some models require high computational power |
| Active open-source community | Limited UI for non-developers |
| Frequent updates and innovation | Lacks advanced visual dashboards |

Conclusion

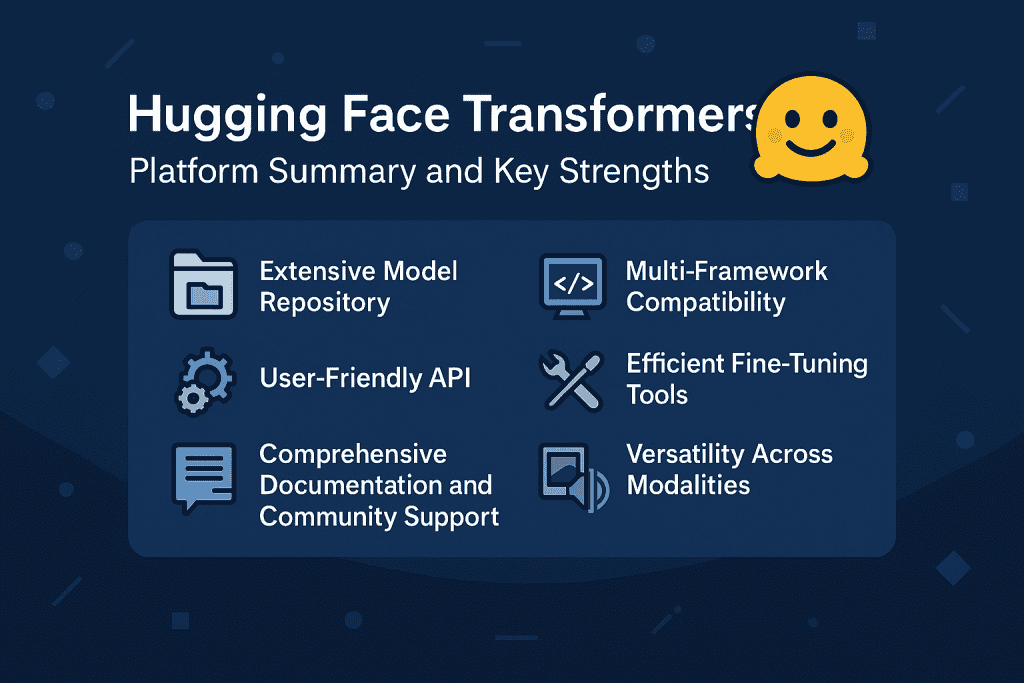

Hugging Face Transformers is not just another tool in the NLP ecosystem. It is a well-rounded platform that combines cutting-edge model architectures with user-friendly design and robust community support. What makes it stand out is its balance of power and simplicity. Whether you’re developing a complex AI system or just exploring machine learning for the first time, Hugging Face provides everything you need to build, train, and deploy NLP models with confidence.

The ability to access thousands of pre-trained models, along with seamless integration into Python environments, accelerates development cycles significantly. It is also backed by one of the most engaged communities in the field, which ensures continued innovation and reliability. For anyone working in natural language understanding or generation, this platform is more than a recommendation. It is a must-have in your AI toolkit.

Hugging Face Transformers Rating

Curious about our final verdict? We scoured the platform and combed through user feedback. Based on our overall experience, here is our rating:

⭐️⭐️⭐️⭐️⭐️ 4.8/5 – Highly Recommended

FAQ

What are Hugging Face Transformers used for in today’s tech landscape?

They power a wide array of NLP applications such as chatbots, translation, sentiment analysis, and summarization, placing them at the heart of today’s tech trends.

How do Hugging Face Transformers compare to other NLP frameworks?

They stand out for their user-friendliness, open-source access, and integration with Hugging Face AI tools, making them more accessible than many alternatives.

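Can I use Hugging Face Transformers for commercial projects?

Yes, subject to licensing. The Transformers library itself is released under the Apache 2.0 license, and many models on the Hub use permissive open licenses that allow commercial use, which makes them a good fit for innovation-driven ventures. Because licenses vary from model to model, check each model card before deploying.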
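A quick programmatic first pass is to read the license tag that the Hub attaches to a model. This sketch assumes the `huggingface_hub` package is installed. It also assumes licenses appear among a model's tags in the form `license:<id>`. The allowlist in `PERMISSIVE` is a hypothetical example to adapt to your own policy, and the helper names are illustrative.

```python
# Hypothetical allowlist of license IDs treated as commercially friendly
PERMISSIVE = {"apache-2.0", "mit", "bsd-2-clause", "bsd-3-clause", "cc-by-4.0"}


def license_from_tags(tags):
    """Return the license ID from Hub tags such as "license:apache-2.0", or None."""
    for tag in tags:
        if tag.startswith("license:"):
            return tag.split(":", 1)[1]
    return None


def is_permissive(tags):
    """True if the model's license tag is in the PERMISSIVE allowlist."""
    license_id = license_from_tags(tags)
    return license_id is not None and license_id.lower() in PERMISSIVE


def check_model(repo_id):
    """Fetch a model's tags from the Hub (needs network) and classify its license."""
    from huggingface_hub import model_info

    tags = model_info(repo_id).tags or []
    return license_from_tags(tags), is_permissive(tags)
```

A model whose tags carry no license, or a license outside the allowlist, comes back as not permissive, so it gets flagged for a manual read of its model card.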
